Build a simple Raspberry Pi 3.5-inch dashboard with Warp 10, controlling the framebuffer from WarpScript instead of using X.Org and other heavy stuff!

If you're a regular reader, you already know we connected our BeerTender to Warp 10 using a LoRa network and the MQTT plugin. If this is the first time you hear about Warp 10, here is a short video to learn more about our open source time series database: watch the video.

That was a nice challenge, but the Raspberry Pi 3 in our server cabinet is really underloaded. And reading the beer level means starting a computer or smartphone, connecting to the local Wi-Fi, then launching a WarpScript. That's not very user-friendly, and it leads to catastrophic empty-barrel situations. Raising a Slack alert is possible, but the alert might be ignored. We need a true IoT dashboard with a screen, close to the BeerTender!

Screen for Raspberry Pi 3: there are a lot of them. I bought a cheap 3.5-inch 480×320 screen.

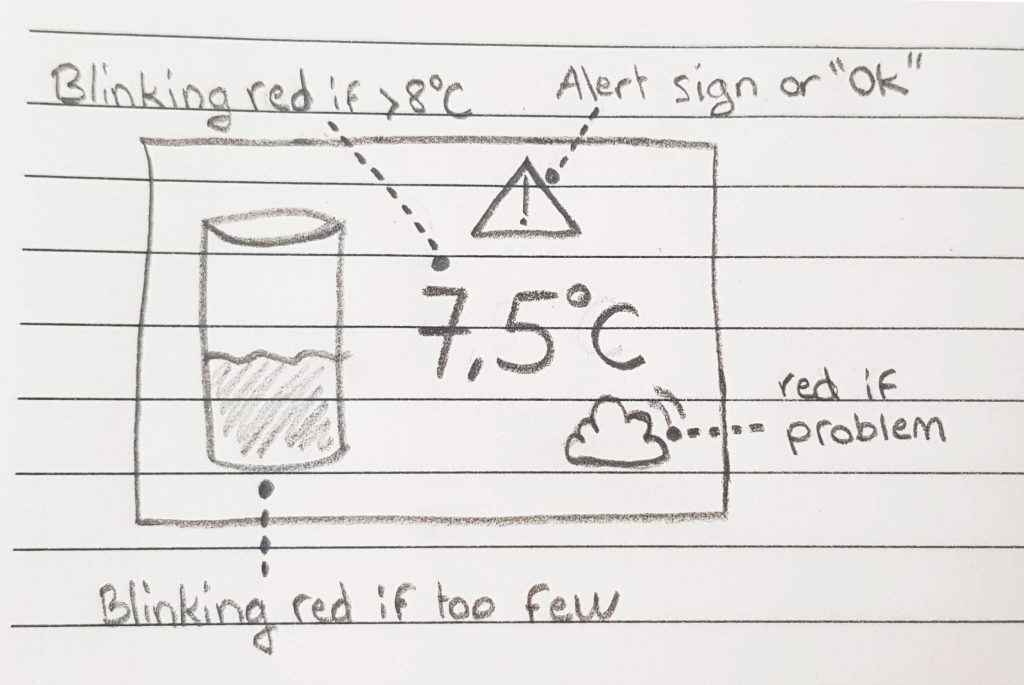

It is time to build a nice dashboard… I need the beer level, the beer temperature, and a LoRa alert if there is a network failure. Easy to sketch!

Building a timeseries dashboard with Raspberry Pi?

The Web expert way:

- Our web expert's answer: no problem, I will do this in a web app, with npm, webpack, babel, react, packaged in electron with a nodeJS backend!

- Me: OK, so you need X.Org, maybe a window manager + 300 MB of free RAM, to launch a 100 MB binary… To display a beer level.

- Our web expert: But with CSS, I can draw animated bubbles in the barrel!

- Me: Why not a WebGL shader…

- Our web expert: Great! Good Idea!

- Me: …

My way:

When I worked in the automotive industry, I used to take screenshots of the framebuffer on high-end TFT dashboards, because there was no graphics layer at all.

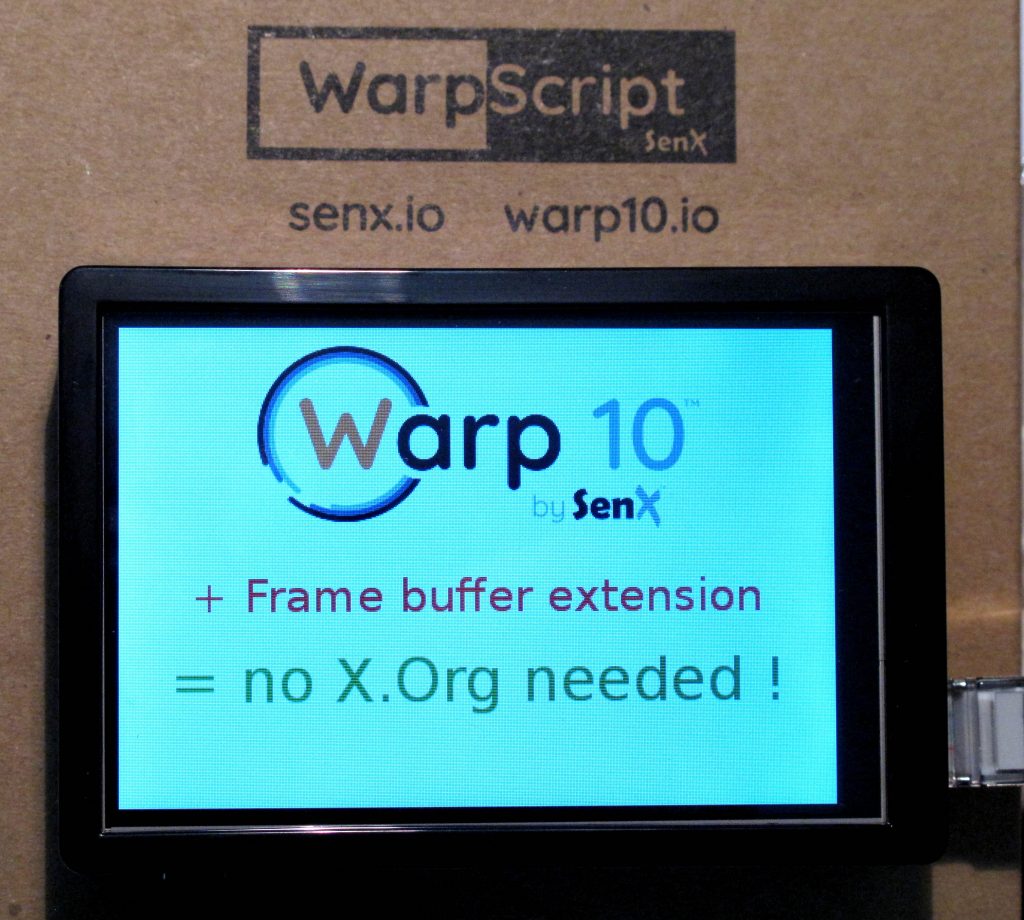

Maybe Warp 10 could draw Processing images in the Raspberry Pi framebuffer directly?

Warp 10 ships with a large subset of the Processing functions, so everything is ready to generate and handle images. I just need a way to display them on the tiny screen.

Prepare the Raspberry Pi

Installation is straightforward: connect the screen, compile and install the LCD driver.

git clone https://github.com/goodtft/LCD-show.git

chmod -R 755 LCD-show/

cd LCD-show/

./MHS35-show

Reboot. The screen now shows console messages:

That's nice, I just have to remove the blinking cursor: add vt.global_cursor_default=0 to /boot/cmdline.txt

Taking a screenshot of the framebuffer is straightforward: cat /dev/fb0 > screenshot.raw

Conversion to PNG is a bit tricky, but can be done with ffmpeg: ffmpeg -vcodec rawvideo -pix_fmt rgb32 -s 480x320 -i screenshot.raw screenshot.png
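If ffmpeg is not at hand, the same conversion can be sketched in plain Java. This is only an illustration, not part of the original setup: the class name `RawToPng` and its `toImage` helper are made up for the example, and it assumes a 480×320 dump with 4 bytes per pixel in B, G, R, A order and no padding at the end of each row.

```java
import java.awt.image.BufferedImage;
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import javax.imageio.ImageIO;

// Hypothetical helper: turns a raw framebuffer dump into a PNG file.
public class RawToPng {

  // Assumes 4 bytes per pixel (B, G, R, A) and rows stored without padding.
  static BufferedImage toImage(byte[] raw, int width, int height) {
    if (raw.length < width * height * 4) {
      throw new IllegalArgumentException("raw dump too short for " + width + "x" + height);
    }
    BufferedImage img = new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
    for (int y = 0; y < height; y++) {
      for (int x = 0; x < width; x++) {
        int i = (y * width + x) * 4;
        int b = raw[i] & 0xFF;
        int g = raw[i + 1] & 0xFF;
        int r = raw[i + 2] & 0xFF;
        // The alpha byte (raw[i + 3]) is ignored.
        img.setRGB(x, y, (r << 16) | (g << 8) | b);
      }
    }
    return img;
  }

  public static void main(String[] args) throws IOException {
    byte[] raw = Files.readAllBytes(Paths.get("screenshot.raw"));
    ImageIO.write(toImage(raw, 480, 320), "png", new File("screenshot.png"));
  }
}
```

Run it in the directory that contains screenshot.raw; it writes screenshot.png next to it.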

Playing with the framebuffer

The framebuffer is a raw representation of the screen pixels: 32 bits per pixel, stored in "BGRA" byte order (endianness…). It means that for a 480×320 screen, you need 480 × 320 × 4 = 614400 bytes. That is exactly the size of my screenshot.raw file.
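The arithmetic can be written down as a small Java sketch. The class and method names are invented for the example, and it assumes that rows are stored one after another with no padding, which matches this screen since the dump is exactly width × height × 4 bytes. Other drivers may use a longer line length.

```java
// Hypothetical helper illustrating the framebuffer layout described above.
public class FramebufferLayout {
  static final int BYTES_PER_PIXEL = 4; // B, G, R, A

  // Total size of one frame in bytes.
  static int frameSize(int width, int height) {
    return width * height * BYTES_PER_PIXEL;
  }

  // Byte offset of pixel (x, y), counting from the top left corner.
  static int pixelOffset(int x, int y, int width) {
    return (y * width + x) * BYTES_PER_PIXEL;
  }

  public static void main(String[] args) {
    System.out.println(frameSize(480, 320));    // 614400
    System.out.println(pixelOffset(0, 0, 480)); // 0: top left pixel
    System.out.println(pixelOffset(0, 1, 480)); // 1920: first pixel of the second row
  }
}
```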

The first 4 bytes describe the color of the top left corner, and so on. Here are a few examples to fully understand how this works:

Write a black line

for i in {1..480}; do printf "\x00\x00\x00\xff" ; done > /dev/fb0

Write colors in the first 3 pixels

printf "\xff\x00\x00\xff\x00\xff\x00\xff\x00\x00\xff\xff" > /dev/fb0

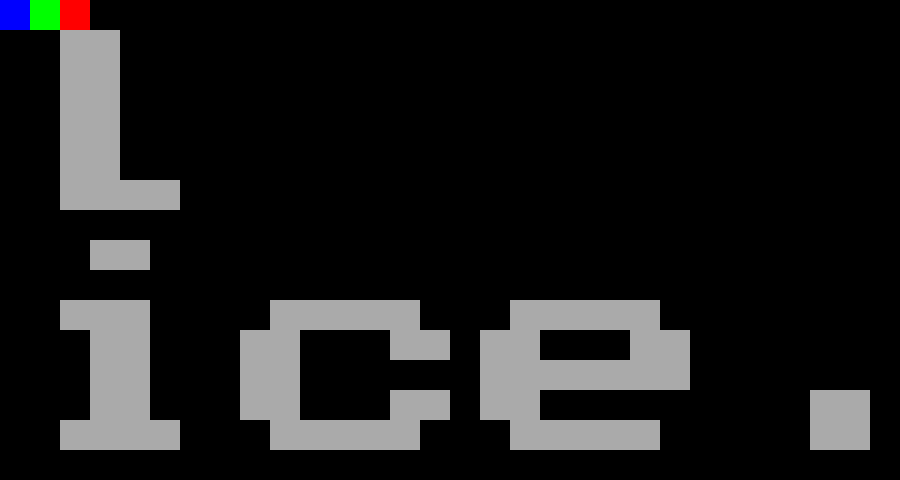

The first pixel should be blue (BGRA = 0xFF0000FF), the second one green, and the last one red.

That's nice. Warp 10 can write directly to /dev/fb0 too… I just need to add the warp10 user to the video group:

adduser warp10 video

Processing2Framebuffer

It is time to develop a Warp 10 extension to do the main job:

- Read processing image pixels

- Convert processing pixels (ARGB) to framebuffer pixels (BGRA)

- Write into /dev/fb0

The result is a straightforward function of about 30 lines:

package fr.couincouin;

import io.warp10.script.NamedWarpScriptFunction;

import io.warp10.script.WarpScriptException;

import io.warp10.script.WarpScriptStack;

import io.warp10.script.WarpScriptStackFunction;

import processing.core.PGraphics;

import java.io.File;

import java.io.IOException;

import java.nio.ByteBuffer;

import java.nio.file.Files;

import java.nio.file.StandardOpenOption;

public class PtoFramebuffer extends NamedWarpScriptFunction implements WarpScriptStackFunction {

public PtoFramebuffer(String name) {

super(name);

}

@Override

public Object apply(WarpScriptStack stack) throws WarpScriptException {

if (stack.depth() < 2) {

throw new WarpScriptException(getName() + " expects a PGRAPHICS and a STRING on top of the stack.");

}

Object fbpath = stack.pop();

Object pimage = stack.pop();

if (pimage instanceof PGraphics && fbpath instanceof String) {

PGraphics pg = (PGraphics) pimage;

pg.loadPixels();

ByteBuffer bytes = ByteBuffer.allocate(pg.width * pg.height * 4); // 4 bytes per pixel

for (int pixel: pg.pixels) {

bytes.put((byte) (pixel & 0xFF)); //blue

bytes.put((byte) ((pixel & 0xFF00) >> 8)); //green

bytes.put((byte) ((pixel & 0xFF0000) >> 16)); //red

bytes.put((byte) 0);

}

File file = new File((String) fbpath);

try {

Files.write(file.toPath(), bytes.array(), StandardOpenOption.WRITE); // the device file already exists, CREATE_NEW would fail

} catch (IOException e) {

throw new WarpScriptException("Cannot write file " + file.toString());

}

} else {

throw new WarpScriptException(getName() + " expects a PGRAPHICS and a STRING (the framebuffer path) on top of the stack.");

}

return stack;

}

}

The full code is available here.
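The ARGB to BGRA step can also be tried outside Warp 10. The sketch below is not the extension's code: `ArgbToBgra` and `toBgra` are names invented for the example. It relies on the fact that writing a 32-bit ARGB integer in little-endian order emits its bytes as B, G, R, A, which is the "endianness" remark made earlier. Unlike the extension, it keeps the source alpha byte instead of writing 0.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

// Hypothetical standalone version of the pixel conversion.
public class ArgbToBgra {

  // Converts Processing-style ARGB pixels to framebuffer bytes (B, G, R, A).
  static byte[] toBgra(int[] argbPixels) {
    ByteBuffer buffer = ByteBuffer.allocate(argbPixels.length * 4)
        .order(ByteOrder.LITTLE_ENDIAN); // least significant byte (blue) first
    for (int pixel : argbPixels) {
      buffer.putInt(pixel);
    }
    return buffer.array();
  }

  public static void main(String[] args) {
    // Opaque red, 0xFFFF0000 in ARGB, becomes the bytes 00 00 FF FF.
    for (byte b : toBgra(new int[] { 0xFFFF0000 })) {
      System.out.printf("%02X ", b);
    }
    System.out.println();
  }
}
```

The explicit mask-and-shift loop of the extension and this little-endian write produce the same blue, green and red bytes; the loop makes the byte order visible, the buffer version is shorter.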

The first WarpScript:

// @endpoint http://pi178:8080/api/v0/exec

// @preview image

480 320 '2D3' PGraphics

0xff Pbackground

'data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAJYAAACYCAYAAAAGCxCSAAAABmJLR0QA/wD/AP+gvaeTAAAACXBIWXMAAC4jAAAuIwF4pT92AAAAB3RJTUUH4wgUDhsdc6HCzAAAIABJREFUeNrtnXt8VNW59797z0wSkpBAMCQE0gAa7mJQqQESuhXUWrXWVqW02lpP7Xis9vKeWjz1Pb45p616bLW2Vo9zikfbV61Hq6c3L21VRgXRIIpcJNwKRoSQACGZQEKSmX3+2HtwZu+1Z/aeaxLm+Xzmk3xm1lp777V++3me9aznAjnKUY5yNFxIyk2BJbkAt/5XjpirEBAEBvVPjnLAinpuj/4pBRqBeuBMYDYwzsFY/cBWYAPQDLwO7AEG9E8wB7ORT8uBl3ROE9I/apo+Yc62E7gNKM9N/8ihfwJagZ40AsjJpw84BPwXUJNbnuFDU4CngI+GCJDifTqBdcBnczrW0KMS4E7gEuATyQyUl5dHWVkZpaWlFBcXU1hYSH5+Pm63G1mWUVWVYDBIf38/fX199PT0EAgE6OzspLu7O9nn6AVW6c+yOges7NEXgG/rircjmjhxIlVVVUyYMIFTTjmFsrIyxowZcwI8xo9p0iTpxF9JkpBlGUmS6Onp4fDhwxw6dIgDBw6wb98+PvzwQ/r6+pze4n7gl8BP9A1ADlhppjzgOn3Ci+1yoerqaqZMmcL06dOprq4mGAwSDAYJhULpm1hJwuVy4Xa76erqYtu2bezYsYO9e/fS2dnpZKgHgXuAv+eAlXoqBv4BuM9O49LSUmpqamhsbKS6upr+/v60gsgJ2DweD319faxZs4bNmzdz4MABu92f1Tcje3LASp48wDXAw/EaFhQUUFtby6WXXkphYSGDg4nbLkXiLxZYEiVZlvF4PLz66qusWbOGQCBgp9ufgS8D3TlgJUaNwGvxFnX06NFcffXVVFZWJsSVwnqSy+WitLSU0aNHU1hYyKhRo8jPz8fj8SDLchToBgcHOX78OH19fRw7doxAIEAgEKCvr+8EKJ2AM3wfg4ODPPPMM2zdutVO/4eAG/XdZQ5YNqgMzZI9PlajuXPncvnllzviGKqqIssyEyZMYMKECRQXFyfNday43cDAAG1tbezbt4/e3l7H9/n+++/z9NNPx2s6AHxRF5M5YMWgX+uiz/K+lixZQn19PXl5eXHf6lAohCzL1NTUUFVVhdvtxu12O+YmyepV4c3C4cOH2bNnDz09PbhcLlv99u3bx8qVK+NdphXNhhfKAcvAgIC/xeJSn/rUp1i4cCGjRo2KKfJCoRAFBQVMnTqVsWPHUlBQkJBoSifQBgYG6Onp4YMPPuDgwYO2QLZjxw4ef/zxWM+hoh0d3ZkDlkY/B75l9eOsWbO46KKLKC0ttQRUeLJramqorKykuLjY0gaVIAX1j4zm8ZASkmWZ/v5+Ojo62LNnD8eOHYsLsjfeeIO//vWvsZqsBz4FHD1ZgVUErAHOENoXiou54oorOO200yx3eKFQiOLiYqqrq5k4cWIiYFLRvBK2AC3ADn07/xFwQFGUmFtLv98/DqhCs/ifCswAZurPNNbJjbjdbg4dOkRrayvt7e2WAJMkid7eXp599lm2b98e60W4SJcCJxWwFuqgElJ9fT2f+9znLC3WoVCIkpISpk6dSnl5OcGgbc+UfcB7wAvAHxRFaY0ACYqiJPxAxv5+vz8fuBi4HM0dZ5YTLtbS0kJHR4el0u92u2lpaeF3v/tdLMv+D4HbTxZg3QD8h5CFFRVx/fXXM3bsWCHnUVWVgoICZs+ezZgxY+yaF3YBzwA/UhQlkAoQJQG+r6IdQ03TOXZMvUpVVTZs2EBnZ2dMgK1cuZI9e/ZYDbVOf5EHRzKwHgW+Kvphzpw5LF++nIGBAcs3efbs2ZSXl9sBVAC4X1GU27IJpFhcze/3f1Z/wSrQvFStFbxgkHXr1llyJkmS2Lp1K0899ZTV3PShO
S8eG4nAWq+LBBNdf/31TJo0yVI/mjRpEqeddpodfeld4AJFUQ4NFTDZBNkvgJvj9Tly5AgbNmyIyeXuvvtujh611NvHAkdGErA+QODSIssyK1asID8/35LNL1iwIO5OCe2g9hZFUY4NJQ7lBGB+v9+N5vrzP/H6tLS0sH//fqF4lCSJJ598kq1bt1q9fLW6ejDsgbUfqDR+WVFRgdfrjTouiVTOa2trqa6ujjf23cAdiqJ0MczJALAlwIuxOFMgEKC5uVk4f5IksXbtWl580XKIeuCtdD6PnOb5aheBqq6ujptuukk4KW63m/r6ej7xiZg+e08BFYqirACGPaiASA47qCjKX3S963oru11xcTGKojBu3Djh74sWLeLqq6+2utybgDJcgdWOIIBAURSuvPJKk4lAVVXKyspYtGgRhYWFVvrWbuCTiqIs08cfNiIvAYCFFEVZieYh+6TVhmbu3LnU1taalPZgMMi0adO44YYbrC61igScJLMpCmW0yJQpxh8uvPBCGhsbTQbPYDDI1KlTOfXUU2Pt+H6oKMrtw0l/SrGovEDn1KWi37u6utiwYYPphZQkia6uLu69916roU8HNg8HjvWKCFSXXHIJDQ0NJlCpqkpdXR1Tp061AtVuYPzJDCqd/qooyhjg96IfS0tLaWhowOPxmOa3pKSEW2+9Vah66Lv1sqEOrIfQzqlMoDrnnHNM4k+SJBYuXMi4ceOsRN8fFEWZCnSMRLGXiIhUFOVy4AoEBk+Xy8XixYsZNWqUqX9BQQErVqwQgSsPze05pdIrlYP9HzTf7ChaunQpixcvNnEjWZZpaGiweosArlIU5WlyJNxBAqOBA8AoEcCam5uFHqnd3d1WYnEfMHGocazzRKCaO3cujY2NQlA1NjZagWoQGKcoytP6BOZIwL3046kitLNPk846f/58xowZY+pbUlLCddddJxq2CvjDUOJYkg6GKJSMGzeOm2++WWhjiQGqvcBkRVFy+Q6ccbD70M4gTXP9zjvv0NXVZfq+ubmZ5557TjTcp4G/DAWOtcU4jsfjEYIKYMGCBSJQqcAbaNb5HKgcikVFUb4D/KPInjVv3jyKiopM39fX13POOeeIhnwRGJNtYD2E5n8UJea+973vYcWe8/LyROO8oCjKIkA9mRX0RMWiDq6HgK+J2syfPx+3221aj4svvtjqdGNnstIsGWDVAl7jl8uXLzed/QWDQerq6k4ELxjoJUVRLj7JTQmp2jE+ioW1ftGiRabvQqEQ11xzjaj5OJJ0cU4GWJuMX8ybN4/p06eb2G5tbS3l5eUik8IGRVHOz4EqpSBbqe/QTXrVggULTO3z8vK48cYbRUOtAOZlGlj3A1FsqbS0lGXLlpl2gGPGjKGmpkZk/GxVFGVeDlRpAdfPgDuM3+fn5zN9+nTTC15ZWUlDQ4NQRckksCqAm4xfXnPNNSZHNEmSqKurE3GqLkVRanKgSiu4bkPznI22KVRVMX78+Kg1CYVCLFmyRKT/VgA/zhSwTI4+S5cupby8XCjXLVxqJ+dAlZHd4hVobktRqskZZ5xhss5LksQtt9wiWq8foBlj0wqs8zBEn+Tn56MoiokrTZ061bQT0ekqRVGO5ECVGYUemG78bXBwkHnz5pmO2DweD2eeKXTy/XM6geUGXjZ+ee2115oOlj0ej5U/1UO5Y5qMAywAmPy6w0lUjAzhsssuE3n0LgYWpAtYpq3DzJkzmTjRfLxUX18v6r9DUZR/zB3TZEUk7gL+r1EkTp482WQ8DYVCLFu2TDTUY+kAVgFaxLIJ3ZG7PVVVmThxopWP+tKcXpUdkajP+4/RvUQiQTRnzhyTSAybhww0Fe24J6XAWmH8oqGhwaQA5uXlMWPGDFH/mxRFac2BKuv61mkYEocUFxeb1JZgMMjy5ctFQz2RamA1mbT4884zbVlra2tFUckdiqI8kBOBQ4K60XKbRq2byMmyvLycU0891dh/LHB2qoBlCtEWeSeUlJQwY
cIEUf+EjaCqz953I5lS+by6u823gbaoXZnbzcyZM01c6zOf+YxQbcPGOaKdzClRbgoul4vzzz8/CuExuNXziqJ8lMhkStop5GjVx+VoGZIHdAXyRaAvos1IJ0n1cSrwHbTz2XeAn0te2hKZA11yXEtEeJmqqlRWVrJt27aothUVFdTW1rJjx47Ir4vQzhIPxrzpOPfxJeDxyC/mzJnDFVdcEdWotLSUs846y8hOVTTvxuNOuFV4slQfV6IFD4joIslrHXc3grjVKLQiA6KI3j9JXj6b6Avm9/tXA1En0x0dHWzZsiWq3d69e0WJ39bHE4nxONb3o1AoSSxbtiyKM1nJaODniqIcd/x6aqD6plEXMNALqo9zAf9I5Fq6+Msndq6FS1Uf70pe5wfFOtf6BpovXZReFU5GEqYpU6Ywfvx42tvbI5ueheazdSQRHSsfQ+6qiooKE4CKiooYO9aUCuq4oijfTXBSq+KAKkz3jFRRqD/X5Taa1qk+vu5UD9N1rfeBPxl/mzIlOsBqYGCApUuXioZZnqjy/ojIbhWJZlVVmTZtmohb/SaJef2SzXZnjnBJeJ/NdnclKApBSy0ZRTU1NaaN2bRp00RDPJgosC4w7vpqaqILVqmqKuJW/YqifCOJCZ3mgLvdNtJ2iaoPVB8T0TwL7FB+EjvETWgu4VFragzCkGWZxYsXi4aZ4RRYlRiKQU6fPp3+/v6oRrW1taLT8NeSnFsnuTOvGWniUH8eJ7aZhBOt6lzLZPyeOXOmyUZpcTh9rVNg+UQINyK7srJS5Gv11SSV1rUOutSOUDF4q4O2+5O0a63GEMCSn59PYWFhVNuysjJREMwKp8BaatwtGEWex+MROYZtURRlX5Jv6zMOusiqj5tHijjUxWA5MMdBt5tScOkovh8KhaioqDDp0xdeeKGo7zy7wHJhiK6dMWOGSQzOmDFDxK1+nezRjeQliLPAyR+MFHGoP8dlTtQGyZtcZmR9vYS7QyOw5s6dKxriQrvA+i4Gw6lowLKyMhFr/UmKDpofctB2XIQYHQn0NQdtdyR7MV0ctmMoWydJEqWl0YltwsUY7OhZImDdbhR5kyZNMu0SBPJ2VQon18lYHtXH8kxyrXSBWLe0L3TQJZW74q8bxaExWYssy5xxhikt/3QEtSON6CjA4N88a9Ysk4eohXfo71IoEo47FIe/SONin6L6eEn1sUv18XfVx2osclSlAKxfdygGn0/FC6WLw7eN30+YMMHkb3fuueeKhjjfDrBMJgXj4bIFsJ5I8Vw7CZgcC7hSDS7Vxw1oznFL0BzdpqCdrx1RfYnvfmPoV1c56LI2VdeOSDLSG/l9UVGRSfyVlJSIpJUSD1gmxdHoeuxyuUQD71YUJdVpnjc6aOsCzk2VONR3Z2diUehAp0dVX3R6gRRQg4O2P08Dl46KRQwGgyZdOlz/2kCN8YD1D8YG48dHF+SyiLxJeYCE5KUXZwGTv0ox97Aj2p9PIZBvdtBlQPLy5zTolVHu5+GTFePu33ieKDI5yLGQJ9pyGoH2sZj2kwb6joO2k1Ufo1PxFqs+ShCku7S45vRkr6kD5CsOuvwP6aGjaH5vUaLPeBZ81llnifqeZgWsMXb0q8rKStGg76bJn73VYfvZKXqL/+ig7XUpuqaTQ/VH07RZCaGVRzlBo0ebY1VnzRLWm1psG1hVVVVRHEuSJEpKSkTKX1s6nlLy0ge85KDLL1N06XoHbb+fAjF4HQ5C8SQvL6TDvKIzhxeM4tBoz5IkyZREF0PcYeTDmCyeRrR6PB6Rtf0l0kvXOmh7lupLLmmY7mSY77DP8kQ5iA4QJ+Bcmeb5/m08YKmqKgLWFCtgmSJdjcGMFvWUX0nXE+qLtd8o9+PQ6UlcTwbuTUQXTJKDTHbQ9ok0nzL8wQgio5SyANZEK2DNjwcsC461Ll1PKHlB8hICVjvo9i9JXHIiWnpqp/RJ1ecIHJFg/qwTDil5WZWuUwY9mkoFolzK8/PzT
esu2MRVWgHrDCOoIu0VqqoKFTkM0bVDQByer/ooTPA6yQRo/CDBfj/Lgg4ZS8cCg699Xl6eCVhVVVXG7mOsgDXLiEjjYBapHg+n82H1KJRWtGBL23OUgAI9BpvldS3oS06vqdMkB90eydBhe188SVVRIXRwzRMBK0oEGA8gVVUVVjwgzdW3Itj+egfdvp7ANZIVMEWqj6vtLrwejXSOQ9H7boYO2+MC65RTThH1m2oElunhjDsBwApYPWSGbnTQ9nInnEP14QLuSsE9ft/hwv+Xg7a/kLyJuyE70bMwREq7XC7TMY6oOEEk9w0Dy3T4XFJSYkKpqBKqoiihTKBK8tLiRJ9TfXzBAbdanKLbPF3fWdoBc75D0XtvJsSgrme1GneBRm9hC7Wo3AisfDvcSXBOOEBmyUlV0OUO2j6Ywnt83CaYT3M47t4M+py1x1t7ixSgY43AMiHGuBOwGCiQqSfV39bbHHT5gk3OMZsYYUwJ0KcNyrkVOeE/D+ou25miLhtMRYSJIiOwTJnSbBT4Nil56VbiJS8b0Spe2QXj52MtsM4BvmlzOLt5OMeoPpRY3EX1UYQhb0Ic+n8ZlgzH44FIVVURsEy7QpXhQ05sTV+Ls8Aygho0FiC8FGwHLjweh2M5FYOdw2RtQkZgmRRwozuyBRVk8q71xfqFgy5L4/z+LZvjhN1U7EZ4V6KlH7Kif3Wyc8ywGBSuq6gksOAUZsAILJMS3t/fb7K8C6g0k0+ri8N3gEN2J0j1cUGMBbbr7/XPuqF2D/bsdjIWSUv03aCTEK9vZ4HzjLHDaASY6DECq9/Y4tgxcwadgQET/lxkh37toK0p7lBX2huBGhv910petkWMcZHN635X9eEWgPpsB/d+VPJmzE4YSePjrb1F/e5OI7B6TdDr6TEpZ8ePm9Nd+f3+jIJLX6jHHXSps1Da7YqjH4fBof9dh/2airUCruXEaPBMpuMldQNpjVHsGYHV0yPE+4G4ovDIEXNshLFWjk6jM/ngEeLQ7ptcqvo4W7BA59ro2yN5eS4MDv3agwjyslrQs4LvrnHwuN/IdJS3biAdbxSDRrHX2SncT+w1AgsMJ9oHDx6M4liSJAnFY6b1rAi620Hb+yMXSPXxnzb73SDimJKXO0RbcgHNUH0fu5OoPnP8XQzar8dXZoMKjMAySq+DB4UpSHeLgBVVfKm9vd00mAX7K8v0U+vcx0lAweyIfgCft9nvKSOniwCoXR+xL0f87+Q04HmyRwVG/cqIhQMHhObEoAhYGyJb9Pb2RgVSSJJEIBCwpehlSBxutsk1QMu+fKruUXARhtxfFnS75GUghiiyq8T/NOJ/J3kZvpPpeY2ItCo06tZGYH30kSkZ9iHjtjhM6+LtDEXIxVngQarpnx20DfuK21WHfXGs9gMISuxZcNhG1RedITEO/T0bu0G9PEoBBm8XEbAOHTJZfNqsgNVs2usePUo8WYsWfp5x0hf99w66NOhJN+w41r0ieWmPY7UHuM7mtW8DrnRwry9k8WW9zLgj7O6O9rEU7RKBj6yAZYKgUfRZAKsxG0+vi8PdDrb+buA57FVn/7LN678J7LEx3oXAMgeP929ZBNbVUQCRZbq6om3Csiyb8qUBu6yAZbIv7N27NypPg6qqQjOE3++vzuJE/JODtnZMDF2SF1txkjrXsuuHbtcss03ymt1WMqhjRR2DqapqAtbAwIDIEv+GFbBMPuU7duyIApYkSbS1Ced8XjaKMOkL+1iKh7Ut2nWudQ8wmMLrP5NFULkwnKYEAgGTlNq8ebNoiFetgAWG0/vW1lbToB0dQifOJdkoGacv7KEUDtkLvJtAv/tTeA8PZQNY+vqNBqICBru6ukzZhd555x3REB/GApbJB9vIoSy8Hq4gu/S9FI1zux7H6JRr/muKrt8ieaMXKJu7bEmS6OzsNDkj7N2719jP5NlrBNZfTKq+wV4RCoVEVb6q/H5/VRYn5AEgWd/7Y5I3yubkhGt2AU+m4
Dn+cyi9oC6Xi8OHo6P7VFUVrf/qeMAyndls377d5E26Z49wI/TFLBa7PJ4CPecvSep6P0nBczybjSS9fr8fv99fYsRDd3e3affX0dFhK82CLFigqIT0W7ZsMfk7C6yuAF/IVmlePSyqKclhrkzi+uGD8W1JXP/vkpcPspFaXF+384zf79+/P4qpSJLEK6+8YuullOPZUFRVNXGoUCgk8sdZ6Pf73WSJJC93JiEO30yRl+aNSfT9YZbFYBSvlGXZJAaDwaCpWCbwHgJbomxxgShet3HjRru7w3/J8uQkmkfihhSJoGRSkr+URTE4AYGrjNFA3tvbKxriUdGXImCpRl2rpaXFlLZGgFxwmL8glaQvygMJdN0ieXkvFSJIF8nXJdC1TfJmNG7QKAZNasDu3btNblMWZoa/2QUWGFIldnZ2mjhUMBgUmfVP8/v9U7OhxOt6TiLi5Kep4hT6OIkYOL9FdunnRjHY0dFhklIvv/yy8MV0AixTNMqqVdFcXpIk9u7dKzo7/G22lPgwM3XQ9qjk5dFUcQod3N0488kH8GerZIvf7zc5H/b29po8Wywc+yw9aa2A1YMhMHTnzp0dxt3h7t27RUr8/Cy/ff/fQdsfpekenKTWPiR56chioSlTBsPNmzdHWdtlWeatt4TZDZ50Ciww5MXs6em5aXBwMCrfqMiABkh+v/+P2bJpSV5+7KD5PengFJKXAPCBzebXZWOedKV9IYYSdi6Xy+QpPDAwQHOzyauqnxhFomIB6zdoLr1fAmYCTyHwaty6dasoHP8i3WksW2/h23ZAFcdDNFmyW2Xib9kQg7q6Yiors23bNpMB1GKjdnXMl8vpDTU1NfUTcVAZCoWYN2+eqDb0U4qiLMv0hOmLNFe3r1jRMTT35L50AEsPuED18TSxz1F/Knm5JRvcCi2Z8RvG31599dUoYOXn53PnnXeanP3QDqx7EuFYVnSpcQexc+dOEde6yu/3j8rS7nAjWkUqUX3p9WiFvPvSxa0iwsWuxNpf62eSl1uyyK1MV963b5+QWwlA9Qpxwu8kh9wKnVtF2RlCoRB1dXWi4pivK4qymCyS6uMraM5rAeBxycsbYY6SwXso000KM4DNwH2Sl55M30cEt7oK+O+oe1RVVq1aFaW0ezwefD4fra3RedjQQvC7UyoKdYBdj+EkvrCwkAULFohOvmcrivI+ORoy5Pf7DxIRqSRJEq2trezaFeVdTFtbGw89ZHIP68BGZJacAKhAq7QVZd8/evQoH34odCXaFPGm5Ch7YAr/fQJD+NvAwADbt2/HuDt87rnnREPZqgArJXqjTU1N1wKPGG9GURQR17pVUZR/zy1v1sFViiG2QZIktmzZQnt7tJv9Rx99xK9+ZarU167rp3FJThBUNDU1PYrh0DcYDLJxo7B+5V1+v39ujmtll1shqKbW3d1t8hJ2u90884zwZMp2ioBkgAV6vs0oAdzRITpDDCvyOZGYBdLn/bdAiXFH/95775l82tetWycyfO/EQdVbKdmbbmpqWmOUux6Ph4aGBpGn4dOKolyVW+qMc6x6DDWkJUli165dfPDBB1HnvXl5edx+++2i2IapRCT9SAvHMijySzD4b4mUQZ2u9Pv9N+aWOnMi0O/3j0FQmDwQCLBnzx6TE8FvfvMbEaiedwKqlHAsHWDfxXyYqS5evHinLMu1gi5nABuz7AVxMuhVLrTQ9wojt1q9erUpTP7IkSPcd999ouGKEMRDpI1jRQDrZ5hr3UjnnXfeNATZAtHyRBTn9K30gUp/af+MweYkyzLvvvuuCVSqqlqB6hanoEolsAAuiLiBAT5O4C9yo8nXd5TuHAzSAyq/3+/TN1dRUqm1tdWUjU+SJJ5/XpiO6wNwHhKXamAdbmpqKgJcTU1NeXycJnEL4nRDBUBnNgMwRjCoforAWbOvr4+dO3ea9KrW1lbWrRPWM/10ovcipfLBwmaICHNE5MM+grigZQdaXvRQTudKCbh+giAyX
FVVXnvtNdNO/dixY9x9tzDr5jdJosaQlOE36TngM4Im+9BrCufAldT8/hJBCRdVVXn99ddN3r4ej4e77rpLlKmxFXupytMrCuNR2DCqKMrFuuJuNHBV6bsXd06hTxhUj1mBau3atSZQ5eXl8cQTT1il/5yb7H1JmZ4EfQu8EXGtvoNoVegP6EWvc5ScJKC5udkUGCHLMq+88orVKUg9zsr3ZY9jRXIutKjZ0xHn7xyHliu8Kse5bJHH7/e/YwWq9evXm0AlSRLvvfeeFaj+PRWgyjiwIsAVUhRlFoZMzToHdQH35XStmFwfv98/E82Lc55I/K1bt04o5lpbW60OmN8Ebk3VfcrZmJwInWsesEbQJCcGY4u+64D3EdTyDgaDrF271pSYGLRMxw8//LBo6A/RfOAZ1sAygKuBaDdZFbgpByMh5fv9/jcBIToGBgZYvXq10Luku7ub++8XJh7sAT6R6huVszlLYXGnKMoX0aI+FiqKIkN2krsOYbGH3++/AK2i7Tmitt3d3axZs0ZY/u/IkSPce++9VpeZlI57l4biZOb0q6j5kHRRNdGqzbZt29i/f795cfU0CAJP0DA5coUZ6sCS0Oohr87BJi6o/hstLlEoWVRV5c033xSW+3O5XKxfv57f/96yxsJMoCWdi5xJ+jIfp8/uRwvLej0HIROgfoJWq7pIqL/IMm1tbbS0tAgLUubn5/Pss89anf+BFoa2Ld3cI5MUFXakUxOpyzo8nHd6LuAutKyAhVbtQ6EQmzZt4tChQyaX4jDoVq5caYwFjKTJ2M8rMWyA1YXB71qnPwKXk3zm42EFJv3/OrTsNDGTg7hcLj788EMrz1wkSaK9vZ2HH37YqmBpF1CNFrjLSAPWjVhn3evVbSnvnQRgmoAW8fJvxDnslWWZI0eOsHnzZmEVLtCiat5+++1Y+tQWDFll0k2ZNjc8iBbeLaJRaJb4pnj3FXbJMf4/FE0F+v8yUO33+7/k9/t3oXlz/DoWqCRJore3l7fffpv169fT399vApUkSfT19fHggw/GAtXzmQZVNs0NFWiuGXkWv/cA0zCkBo8AUxFRYksuAAAEHElEQVRaNa1dwFo9A04ieo0MSIqiBJPlQgZAjdI/9bqYc+Qwd/z4cTZt2iSsYxNJO3fu5LHHYpYS+j6pyT8/bIAV5pbvA9NjtHkeuNgAKlM0L1oq6yYgZHQ0jAGKy/i43mGbfq3X0M7MdqKdAKhx5k4FytHcrxvQ3LPPTHRCAoEAGzduFNUCNAHvgQceEGWBOWGJ0JX01mwt7lAwkC4Hnog1j8AK9ASsTU1NQmc2tMoUfwc+39TUtCUOqEwZc0QbsIiPapgzSX8xXElNvs6Ntm3bRltbm9B0EEl5eXk89thjbN26VWhh1+k54JJsL+pQsbyfoivtserxHAK+ec8993wiEAjEq2B/BM3945ampqZNYV0swl36YrQIlsyzaVlGVVV2795NW1ub1Q7OZJf605/+RHNzs1WRrDApGMq7nezACpOX+GXV9t18881V48ePFyUfsdpmbwB+Bzza1NTUo4Mr7R4UkiQhSRKyLHPw4EHa2tro6uri6NGjJ36L1dfj8fDiiy/S3NwcD4DPoBUyDwyVhRxyZ4VocXCPYOG8FqZp06ahKAqTJ0+2yhVhRe3A22VlZVJ1dfVFJSUl5Ofno6pq1MepOAsDKBgMEggECAQCdHV10dPTQyAQQFVVoUFTZDo4duwYq1atorm5Od7L04mW/P/lobaIQxFYYVoC/AdQG6tReXk5jY2NzJkzB4/HY5eLaUqUXhPI7XZTVFTEqFGjKCgooKCgALfbjdvtRpblKECEQiFUVWVwcJDBwUH6+/vp6+s7kRu9r6/vBMhicSSjeHS5XOzcuZO33nqL99+Pm6duEO204kdDdfGGMrDCdIO+44ubl6muro758+dTVVWF2+12BDLTtiqCa0X+bywDkoyuJcsy7e3tbN26lZdffjmu8o7m1v0E8JWhv
mjDAVhh+g5aEagyO41PP/10GhoaKC0tpbCw0LGIS5e+1d/fT09PD5s2bbIqIWK1M34e+PxwWazhBKwwXay/tSV2O3g8HhYtWsTZZ59NQUEBLpcrKW7jhILBIMFgkJaWFlavXm3KnBeH+tByvX57uC3ScATWCfUKzcC50CnnABg3bhyzZs1i2rRpVFdXC8VeIgp8Z2cn27dvp6Wlhd27dyfKKQNouS9eYpj6/w9nYIWpCO1A9zEs/JfskMvlOqFEhxXvUaNGUVhYiMfjOaHADwwM0NfXx9GjRwkGgydqJIdrZScpbu8A7tZNJDkaQjQRuAbN30gdJp870I615NzyDQ86BbgIrQJD9xAC0gs6+KeN5MmXTiKgVaD5e31S/5yFVmEhXRQC3kUrGPUW2uH21pNlsk8mYFnRXLSQ/xloHgHjdMCN1nW2fLQyL+G5GtS3/726kt0FHEbzsdqG5lS3gSF0vJKjHOUoRznKUY4yTv8Lqx6FKjE4rrkAAAAASUVORK5CYII='

Pdecode 165 120 Pimage

'CENTER' PtextAlign

0xffff0000 Pfill

50 PtextSize

'Hello World !' 240 100 Ptext

DUP

'/dev/fb0' PtoFrameBuffer

Pencode

And the first image:

Get it from WarpFleet!

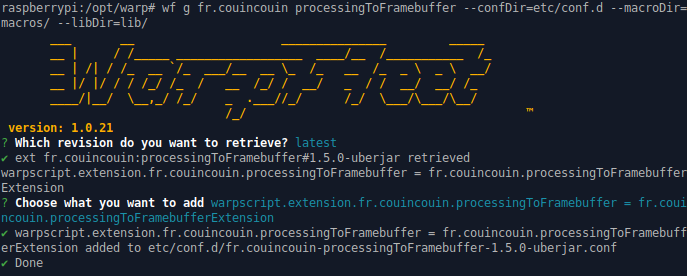

I published this extension on WarpFleet. Installation is really easy once WarpFleet is installed. Open a terminal in the Warp 10 directory of your Raspberry Pi, then get it from WarpFleet:

npm install -g @senx/warpfleet

#install extension

cd /opt/warp/

wf g fr.couincouin processingToFramebuffer --confDir=etc/conf.d --macroDir=macros/ --libDir=lib/

(How to publish an extension will be the subject of my next blog post.)

Performance

Since Warp 10 2.1, timing is very easy. Surround the function with CHRONOSTART and CHRONOEND, then call CHRONOSTATS to get the result.

'Framebuffer draw' CHRONOSTART

'/dev/fb0' PtoFrameBuffer //display!

'Framebuffer draw' CHRONOEND

Pencode //also return a base64 png image

CHRONOSTATS

PtoFrameBuffer takes around 25 ms. Nice!

Video in a GTS?

Since Warp 10 2.1, you can store binary values in a GTS… It means you can play a video stored in a GTS. Because why not?

Video: Etch-a-Time Series: a RaspberryPi, a laser, and Warp 10…

Extract JPEG images from the video, then encode them in base64 to build GTS input format lines with a binary value:

1// imagesequence{title=introEtchASketch} b64:_9j_4AAQSkZJRgABAgAAAQABAAD__g...

=2// b64:_9j_4AAQSkZJRgABAgAAAQABAAD__gARTGF2YzU3LjEwNy4xMDAA_9sAQwAIBAQEBAQ...

=3// b64:_9j_4AAQSkZJRgABAgAAAQABAAD__gARTGF2YzU3LjEwNy4xMDAA_9sAQwAIBAQEBAQ...

=4// b64:_9j_4AAQSkZJRgABAgAAAQABAAD__gARTGF2YzU3LjEwNy4xMDAA_9sAQwAIBAQEBAQ...

=5// b64:_9j_4AAQSkZJRgABAgAAAQABAAD__gARTGF2YzU3LjEwNy4xMDAA_9sAQwAIBAQEBAQ...

=6// b64:_9j_4AAQSkZJRgABAgAAAQABAAD__gARTGF2YzU3LjEwNy4xMDAA_9sAQwAIBgYHBgc...

=7// b64:_9j_4AAQSkZJRgABAgAAAQABAAD__gARTGF2YzU3LjEwNy4xMDAA_9sAQwAICAgJCAk...

=8// b64:_9j_4AAQSkZJRgABAgAAAQABAAD__gARTGF2YzU3LjEwNy4xMDAA_9sAQwAICgoLCgs...

=9// b64:_9j_4AAQSkZJRgABAgAAAQABAAD__gARTGF2YzU3LjEwNy4xMDAA_9sAQwAIBAQEBAQ...

In a WarpScript, FETCH your data, and feed a simple decoder:

[ 'readToken' 'imagesequence' { 'title' 'introEtchASketch' } NOW -1000 ] FETCH

0 GET

SORT VALUES

<%

'iso-8859-1' ->BYTES 'imagebytes' STORE

$background

$imagebytes

Pdecode // decode the JPEG

0 0 Pimage // paste it on the background

'/dev/fb0' PtoFrameBuffer // display!

%> FOREACH

You can handle 10 fps without any kind of optimization.
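The encoding step at the start of this section (JPEG frames to GTS input format lines) could be sketched as follows. This is an assumption-laden illustration, not the tool used for the post: `FramesToGts` and `toGtsInput` are invented names, timestamps simply count from 1 as in the sample, and the URL-safe base64 alphabet is inferred from the sample lines, where the JPEG header bytes FF D8 FF appear as `_9j_`. Whether Warp 10 expects the trailing `=` padding that Java's encoder emits is not stated here, so check it against the input format documentation.

```java
import java.util.Base64;
import java.util.List;

// Hypothetical helper: builds GTS input format lines from JPEG frames.
public class FramesToGts {

  // className and labels are written on the first line only;
  // following lines use the "=" continuation form shown in the sample.
  static String toGtsInput(String className, String labels, List<byte[]> frames) {
    Base64.Encoder encoder = Base64.getUrlEncoder(); // '-' and '_' instead of '+' and '/'
    StringBuilder out = new StringBuilder();
    for (int i = 0; i < frames.size(); i++) {
      long timestamp = i + 1; // one tick per frame, as in the sample
      if (i == 0) {
        out.append(timestamp).append("// ")
           .append(className).append('{').append(labels).append("} ");
      } else {
        out.append('=').append(timestamp).append("// ");
      }
      out.append("b64:").append(encoder.encodeToString(frames.get(i))).append('\n');
    }
    return out.toString();
  }
}
```

Each element of `frames` would be the content of one extracted JPEG file, for example read with `Files.readAllBytes`.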

Conclusion

Warp 10 Processing functions allow you to draw any kind of image from within WarpScript. Displaying an image on tiny hardware doesn't require X.Org or any kind of graphics acceleration: just push images into the framebuffer. Writing a WarpScript extension to print an image object to the framebuffer is a one-hour effort that saves a lot of time, CPU, and RAM on your embedded hardware.

If you like this, star us on GitHub!


Electronics engineer, fond of computer science, embedded solution developer.