Data deletion in LevelDB can take time. Discover the LevelDB extension to speed up deletion within Warp 10 standalone!

Data ingestion is something every TSDB can do.

With some databases, you cannot write in the past or in the future, and sometimes you cannot overwrite data. With Warp 10, there are no such limits in the default configuration.

Data deletion is another challenge. Some TSDBs let you delete whole time chunks easily, but you cannot choose exactly which series to delete. With Warp 10 standalone, you can select exactly which series and which time range to delete, but this has an I/O cost.

Let me explain why, and introduce a solution.

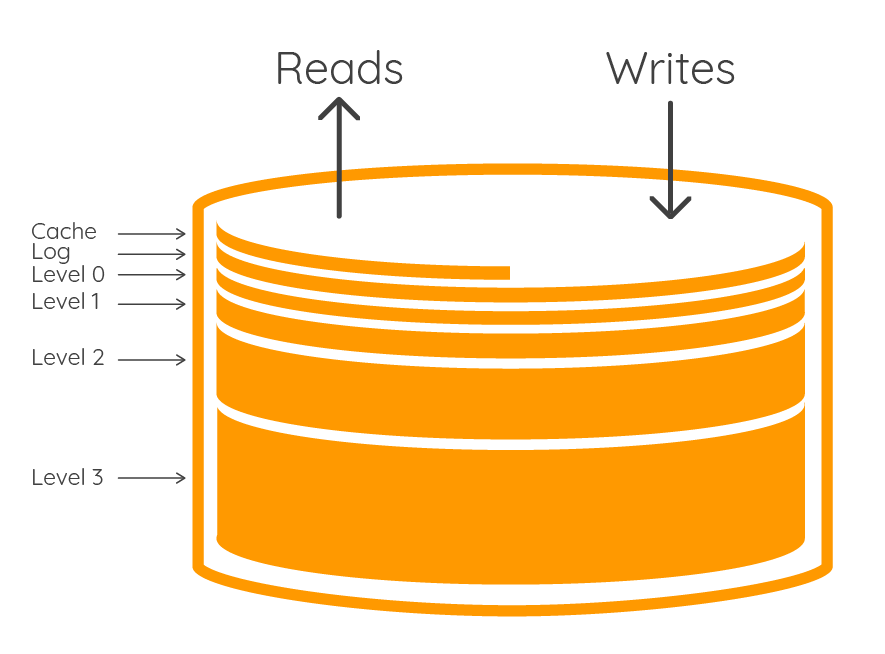

LevelDB deletion process

For new readers, the LevelDB mechanism is presented here.

As an LSM-tree backend, LevelDB handles deletions by writing tombstones to the log file. This means that deletions only reclaim space when the SST files containing the tombstones are compacted into the deepest level.

In LevelDB, deletion means "mark for deletion". A delete is just another key/value mutation recorded in the log, and no file is deleted immediately. Think of it as a "to be deleted" post-it note placed on your data. LevelDB procrastinates the actual work until the next compaction.
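To make the "mark for deletion" behavior concrete, here is a deliberately simplified toy LSM store in Python. It is a sketch, not LevelDB's code. There is a single memtable and a list of sorted runs standing in for SST files, the write-ahead log is left out, and `ToyLSM` and its methods are made-up names. The `compact()` method merges everything into one bottom run, and that is the only place where tombstones and the values they shadow are discarded.

```python
TOMBSTONE = object()  # marker written by delete(); never returned to readers


class ToyLSM:
    """Deliberately simplified LSM store: one memtable plus sorted runs (stand-ins for SST files)."""

    def __init__(self):
        self.memtable = {}
        self.runs = []  # newest run first; each maps key -> value or TOMBSTONE

    def put(self, key, value):
        self.memtable[key] = value

    def delete(self, key):
        # A delete is just another write: the tombstone shadows older values.
        self.memtable[key] = TOMBSTONE

    def flush(self):
        # Write the memtable out as a new immutable sorted run.
        if self.memtable:
            self.runs.insert(0, dict(sorted(self.memtable.items())))
            self.memtable = {}

    def get(self, key):
        # Newest data wins: memtable first, then runs from newest to oldest.
        for table in [self.memtable, *self.runs]:
            if key in table:
                value = table[key]
                return None if value is TOMBSTONE else value
        return None

    def stored_entries(self):
        # Entries physically kept, tombstones and shadowed values included.
        return len(self.memtable) + sum(len(run) for run in self.runs)

    def compact(self):
        # Merge everything into a single bottom run. Nothing older lies below it,
        # so tombstones and the values they shadow can finally be discarded.
        self.flush()
        merged = {}
        for run in reversed(self.runs):  # oldest first, newer runs overwrite
            merged.update(run)
        self.runs = [{k: v for k, v in sorted(merged.items()) if v is not TOMBSTONE}]


db = ToyLSM()
for i in range(5):
    db.put(f"k{i}", i)
db.flush()
db.delete("k1")
db.delete("k2")
db.flush()
print(db.get("k1"), db.stored_entries())  # None 7
db.compact()
print(db.get("k1"), db.stored_entries())  # None 3
```

After the two deletes, reads already hide the keys, but the store still holds 7 entries (5 values plus 2 tombstones). Only the compaction brings it down to 3, and in a real LSM tree that compaction is what costs I/O.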

Every compaction has an I/O cost: LevelDB's background compaction must read existing files and write new ones… This may not be a problem on a big server, but on the small embedded devices I usually work with, it contributes to the wear of the SD card's NAND flash.

But there is a solution…

Speed up!

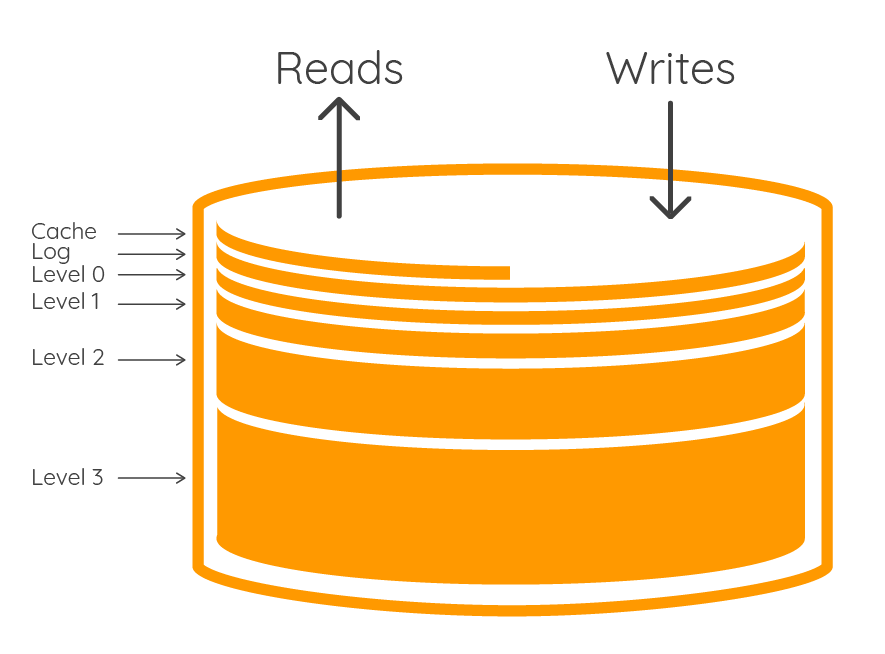

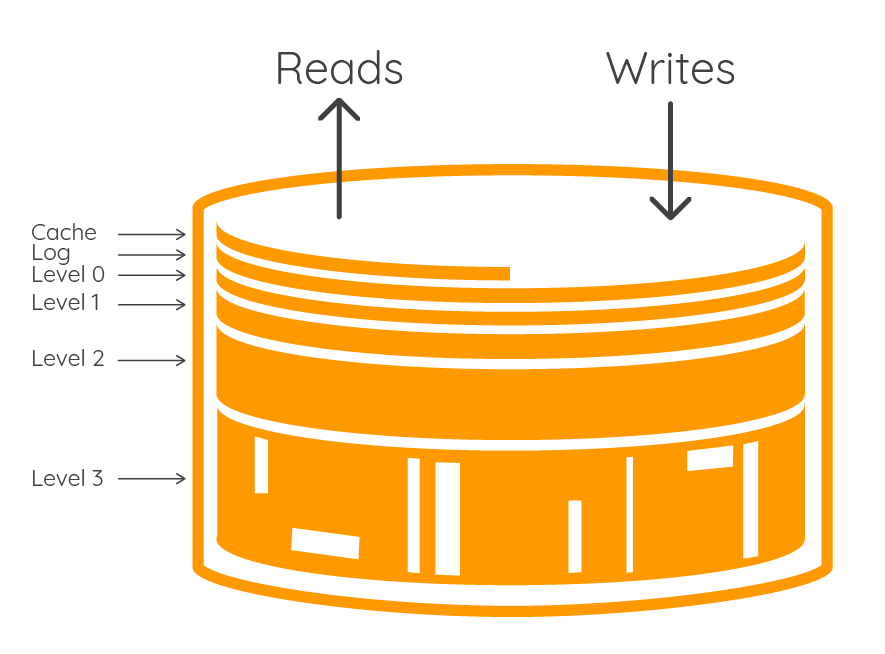

At the last compaction level, there is a very high chance that an SST file contains data for a single time series, covering a time period that depends on the compression achieved.

The idea is to identify, within LevelDB, the SST files that only contain data to be deleted, then lock LevelDB access just long enough to delete those files. That's what the LevelDB extension does, among several other interesting low-level LevelDB commands.
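The selection step can be sketched in Python. This is not the extension's code, which is not public. The key layout `(series, timestamp)` ordered by series and then by increasing timestamp, the `SstFile` record, the `purgeable_files` function, and the `selects` predicate standing in for class and label selectors are all hypothetical. The sketch shows the rule: a deepest-level file can be dropped whole only if its smallest and largest keys belong to the same selected series and its newest timestamp is older than the cutoff. Because keys inside a file are sorted, that guarantees every entry in the file falls within the deletion request.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical key layout: (series id, timestamp in µs), ordered by series, then by time.
Key = Tuple[str, int]


@dataclass(frozen=True)
class SstFile:
    name: str
    level: int
    smallest: Key  # first key stored in the file
    largest: Key   # last key stored in the file


def purgeable_files(files: List[SstFile], selects: Callable[[str], bool],
                    cutoff: int, max_files: int) -> List[SstFile]:
    """Pick deepest-level files made only of selected data older than `cutoff`."""
    if not files:
        return []
    deepest = max(f.level for f in files)
    picked: List[SstFile] = []
    for f in files:
        if len(picked) >= max_files:
            break  # per-call limit, like the file count passed to the purge macro
        if f.level != deepest:
            continue
        series_lo, _ = f.smallest
        series_hi, newest_ts = f.largest
        # Sorted keys: the same series at both ends means the whole file is that
        # series, and every timestamp in it is <= newest_ts.
        if series_lo == series_hi and selects(series_lo) and newest_ts < cutoff:
            picked.append(f)
    return picked


files = [
    SstFile("f1.ldb", 4, ("a", 0), ("a", 100)),
    SstFile("f2.ldb", 4, ("a", 101), ("b", 20)),  # spans two series: kept
    SstFile("f3.ldb", 4, ("b", 21), ("b", 90)),
    SstFile("f4.ldb", 4, ("b", 91), ("b", 500)),  # too recent: kept
    SstFile("f5.ldb", 3, ("a", 0), ("a", 10)),    # not at the deepest level: kept
]
print([f.name for f in purgeable_files(files, lambda s: True, cutoff=200, max_files=1000)])
# ['f1.ldb', 'f3.ldb']
```

In this model, files that straddle the cutoff or mix several series are left for regular compaction, and entries in upper levels are never touched.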

Raspberry Pi examples

Example 1

The system in this example recorded data for one week, was then powered off for a few weeks, and was powered on again a few days before this test. The SSTREPORT function returns a report describing the content of every SST file, with its level in the tree, as well as the maximum level.

On the Raspberry Pi, we use the pure Java implementation of LevelDB, and the maximum depth is 4. The following WarpScript shows the number of files per level:

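```
'mySecretKeyToAccessLevelDB' SSTREPORT
'sst' GET             // list describing every SST file
<% 0 GET %> GROUPBY   // group the files by level (element 0)
<%
  DUP 0 GET           // the level
  SWAP 1 GET SIZE     // the number of files at that level
  2 ->LIST            // [ level, count ]
%> F LMAP
```

Output:

[ [ 0, 3 ], [ 1, 5 ], [ 2, 56 ], [ 3, 689 ], [ 4, 62 ] ]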
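For readers less familiar with WarpScript, the same computation can be sketched in Python. The article only relies on element 0 of each `sst` entry being the level, so the sample entries below are made up, and `files_per_level` and `total_files` are illustrative names. This sketch sorts the levels explicitly.

```python
from collections import Counter


def files_per_level(sst_entries):
    """Count SST entries per level, assuming element 0 of each entry is the level."""
    counts = Counter(entry[0] for entry in sst_entries)
    return [[level, counts[level]] for level in sorted(counts)]


def total_files(per_level):
    """Sum the counts of a [[level, count], ...] report."""
    return sum(count for _, count in per_level)


# Made-up entries: only element 0 (the level) matters here.
sample = [[4, "..."], [0, "..."], [4, "..."], [3, "..."]]
print(files_per_level(sample))  # [[0, 1], [3, 1], [4, 2]]

example_1 = [[0, 3], [1, 5], [2, 56], [3, 689], [4, 62]]
print(total_files(example_1))  # 815
```

The Example 1 output adds up to 815 SST files. That is slightly fewer than the 820 files found in the directory, and the few extra files are presumably LevelDB bookkeeping files rather than SST tables.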

Before deletion, there are 820 files in /opt/warp10/leveldb/ (1.3 GB), including 62 files at level 4.

```
'mySecretKeyToAccessLevelDB' // secret defined in the configuration
$readToken                   // a valid read token to access the data
'~.*'                        // all class names
{}                           // all labels
NOW 2 d -                    // data older than 2 days
1000                         // maximum number of files to remove at once
@senx/leveldb/purge
```

The deletion macro, @senx/leveldb/purge, took 480 milliseconds.
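The `NOW 2 d -` argument is plain timestamp arithmetic. With Warp 10's default time unit (microseconds), `2 d` is 2 × 86,400 × 10^6 = 172,800,000,000 units, so the cutoff lies exactly that many microseconds before now. The Python helper below is an illustrative equivalent, and `cutoff_micros` is a made-up name.

```python
import time

MICROS_PER_DAY = 24 * 60 * 60 * 1_000_000  # assumes the default microsecond time unit


def cutoff_micros(days, now_micros=None):
    """Python equivalent of `NOW <days> d -` on a microsecond-based platform."""
    if now_micros is None:
        now_micros = time.time_ns() // 1_000  # current time in microseconds
    return now_micros - days * MICROS_PER_DAY


print(2 * MICROS_PER_DAY)                                  # 172800000000
print(cutoff_micros(2, now_micros=1_700_000_000_000_000))  # 1699827200000000
```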

After the deletion, 760 files remain in /opt/warp10/leveldb/ (1.1 GB).

The SSTREPORT output shows that 60 files at level 4 were deleted. The 2 remaining ones presumably contain data too recent to be deleted.

[ [ 0, 4 ], [ 1, 5 ], [ 2, 56 ], [ 3, 689 ], [ 4, 2 ] ]

Example 2

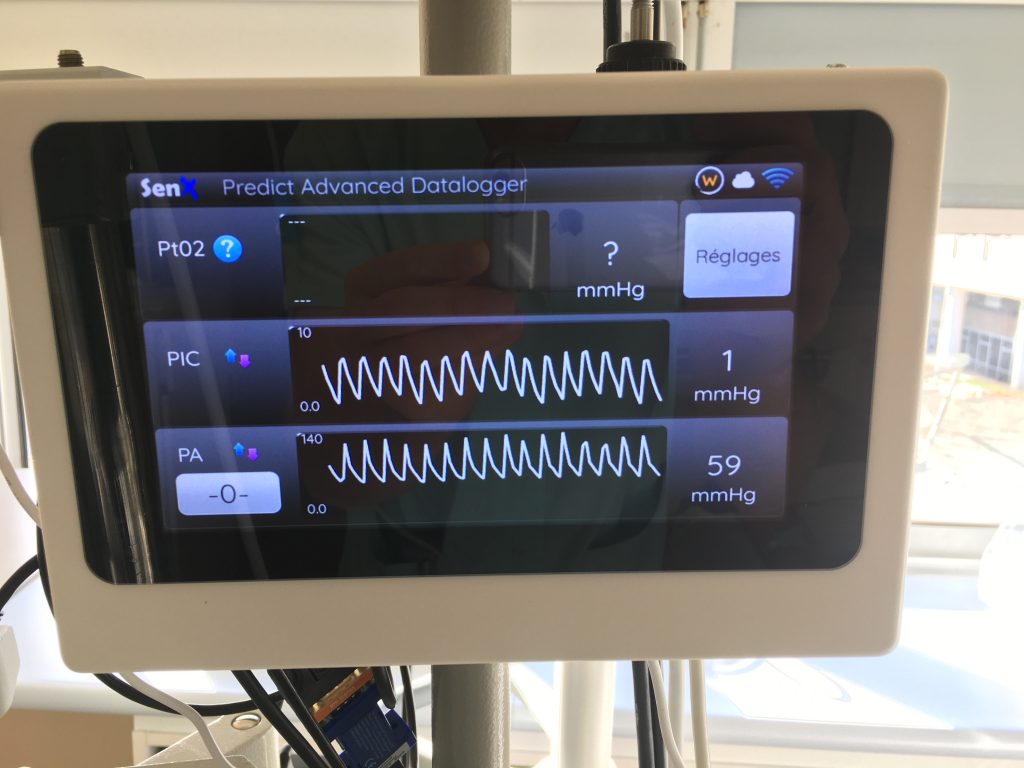

This device is a medical datalogger that records 100 Hz signals from several intracranial sensors. It was heavily used for one month.

Before deletion, there are 2995 files in /opt/warp10/leveldb/ (5.9 GB):

[ [ 1, 4 ], [ 2, 52 ], [ 3, 520 ], [ 4, 2413 ] ]

Most files are at level 4. Note that there is no file at level 0 right now: a compaction just happened.

This time, I ask to remove data older than 7 days. Given the low speed of SD cards, a standard DELETE of that size is a huge task that takes hours of compaction.

With the LevelDB extension, it took only 2.8 seconds.

After deletion, there are 853 files in /opt/warp10/leveldb/ (1.6 GB):

[ [ 0, 1 ], [ 1, 4 ], [ 2, 52 ], [ 3, 520 ], [ 4, 270 ] ]

The 270 files at level 4 should contain data less than 7 days old.
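The Example 2 figures can be cross-checked with a few lines of Python arithmetic. The numbers are copied from the reports above, and the variable names are illustrative.

```python
before = {1: 4, 2: 52, 3: 520, 4: 2413}
after = {0: 1, 1: 4, 2: 52, 3: 520, 4: 270}

sst_before = sum(before.values())       # SST files listed before the purge
sst_after = sum(after.values())         # SST files listed after the purge
removed_level_4 = before[4] - after[4]  # files dropped at level 4
reclaimed_share = (5.9 - 1.6) / 5.9     # share of the disk space reclaimed

print(sst_before, sst_after, removed_level_4, round(reclaimed_share, 2))
# 2989 847 2143 0.73
```

So 2143 level-4 files were removed in 2.8 seconds, reclaiming roughly 73% of the space. In both reports, the directory holds 6 more files than the SST tables listed, which are presumably LevelDB's own bookkeeping files.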

Tips:

If the deletion is driven by a security or regulatory concern, you can run a standard DELETE after @senx/leveldb/purge to make sure everything has been deleted.

@senx/leveldb/purge does not clean up the directory. As a consequence, if you delete every file of a time series, FIND still returns an empty GTS for it, even though no data remains. Again, you can run a standard DELETE with the "delete all" option after deleting the SST files to clean up the directory.
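The difference between dropping SST files and a standard DELETE can be illustrated with a toy model in Python. Everything here is hypothetical, including `ToyStore` and its method names. The store keeps a directory of series, which is what `find()` lists (like FIND, without data), separately from the data points. The file purge only removes data points, while the "delete all" path also removes the directory entry.

```python
class ToyStore:
    """Hypothetical model: series metadata (the directory) kept apart from data points."""

    def __init__(self):
        self.directory = set()  # series names known to find()
        self.data = {}          # series name -> {timestamp: value}

    def update(self, series, ts, value):
        self.directory.add(series)
        self.data.setdefault(series, {})[ts] = value

    def purge_files(self, series, cutoff):
        # Like dropping SST files: old points disappear, the directory entry stays.
        points = self.data.get(series, {})
        for ts in [t for t in points if t < cutoff]:
            del points[ts]

    def delete_all(self, series):
        # Like a standard DELETE with "delete all": data and directory entry go away.
        self.data.pop(series, None)
        self.directory.discard(series)

    def find(self):
        # Like FIND: lists series from the directory, without their data.
        return sorted(self.directory)

    def fetch(self, series):
        return dict(self.data.get(series, {}))


store = ToyStore()
store.update("temperature", 10, 21.5)
store.purge_files("temperature", cutoff=100)
print(store.find(), store.fetch("temperature"))  # ['temperature'] {}
store.delete_all("temperature")
print(store.find())  # []
```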

How to install and test LevelDB extension

This extension is not part of the open-source solution. If you want to use it or evaluate it, we will send you an encrypted jar file with a license key.

If you want to evaluate it, please contact us for a free short time license.

Copy the jar file into /opt/warp10/libs, and create a 99-LevelDB-extension.conf in /opt/warp10/etc/conf.d. This configuration file must contain:

```
leveldb.license = SecretLicenceKeyGivenBySenX
warpscript.extension.leveldb1 = io.senx.ext.leveldb.LevelDBWarpScriptExtension
warpscript.extension.leveldb2 = io.senx.ext.leveldb.LevelDBPWarpScriptExtension
leveldb.secret = mySecretKeyToAccessLevelDB
// For Raspberry Pi, where the pure Java LevelDB implementation is used
leveldb.native.disable = true
// Maximum number of files to remove
leveldb.maxpurge = 1000000
```

Conclusion

The Warp 10 HBase backend offers built-in TTL for data. But if you manage heavily loaded standalone instances, or run on limited hardware, you know that DELETE can be resource-intensive. This extension is tailored for you, and it also provides many other useful functions to repair LevelDB in case of a production problem.

Discover more commercial modules here.

Contact sales team for more information.


Electronics engineer, fond of computer science, embedded solution developer.