Warp 10 3.0 is a major release of the most advanced time series platform. It retains compatibility with the previous standalone version and drops HBase to implement the first production-ready TSDB based on FoundationDB.

On June 30, 2023, we released Warp 10 version 3.0.0, after several community-tested alpha and beta versions.

You can download Warp 10 release 3.0.0 from warp10.io, and find several resources to help with your migration here.

Today's challenge: summing up one year of work and around 130 pull requests in a short article!

Backend changes

- Warp 10 Distributed: Goodbye HBase, hello FoundationDB.

- The distributed version of Warp 10 is now compatible with the latest Kafka versions.

- Warp 10 Standalone: the LevelDB backend is the same as in Warp 10 2.x, so there is no need to migrate data.

- Introduction of the Standalone+ version, relying on a FoundationDB backend.

Goodbye Java 8

With the new backend, we removed dependencies that were compatible with Java 8 only. You can now use JDKs up to the latest LTS (JDK 17) and benefit from their performance improvements. We removed most of the deprecation warnings in preparation for future releases; work is still in progress.

Ops changes

- bin/warp10-standalone.sh is now renamed warp10.sh and doesn't need to be modified. Modifications should be done in etc/warp10-env.sh. Updates are therefore simplified: just overwrite warp10.sh with the new one.

- The runner directory, formerly named warpscripts, is now more logically named runners.

- You can now symlink configuration files.

- Warp 10 can monitor itself without the Sensision external tool. To understand or customize this feature, look at runners/sensision/60000/sensision-update.mc2.

- Added more systemd performance throttling settings to the default Warp 10 unit (bin/warp10.service), because Docker is not the only solution to limit CPU usage per application.

- The bootstrap that enforced user creation was removed. You can now install and run Warp 10 as the current user on your computer for testing purposes with just two commands.

Datalog NG (Next Generation)

Datalog is the name given to the replication mechanism available in the standalone version of Warp 10 to build high availability infrastructures. Replication can be either complete or partial; partial replication is called sharding.

While it did serve its purpose rather well, the design of the datalog in Warp 10 2.x had a certain number of issues:

- All the participating nodes needed to recognize the same tokens as valid, therefore forcing them to use the same keys for token security. This also subjected the forwarding operations to failures related to expired tokens.

- Throttling was an issue after a node had been offline for some time.

- As tokens were used for forwarding changes, the changes were grouped according to the producer, owner and application set in the token. This grouping ensured that changes for all data labeled with the same producer/owner/application triplet were applied in the order in which they were received. This worked great to enforce this integrity constraint, but it scaled poorly, since data changes for a given producer/owner/application were forwarded by a single thread.

The new Datalog mechanism, Datalog NG (Next Generation), differs substantially from the original Datalog while retaining the same objective: enabling replication and sharding of data across a set of standalone Warp 10 instances.

Datalog NG abandons:

- the push architecture of the original Datalog to adopt a pull architecture where replicas (consumers) are responsible for fetching data changes from feeders.

- the use of tokens: replicas apply changes at a deeper level in the Warp 10 instance, so they no longer need tokens, which solves two of the issues of the original Datalog.

- the one-file-per-request paradigm, in favor of a log file which gathers data changes up to a certain size or age.
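To make the pull model and the size/age-bounded log more concrete, here is a minimal Python sketch. It is a conceptual model only, not Warp 10's implementation: the Feeder and Replica classes, the sequence numbers and the rotation thresholds are all hypothetical, and a real replica would fetch segments over the network rather than through a method call.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class LogSegment:
    # One log file gathering data changes until it reaches a size or age limit.
    seqno: int
    created: float
    changes: List[bytes] = field(default_factory=list)
    size: int = 0


class Feeder:
    """Hypothetical feeder: appends changes to rotating log segments and serves closed ones."""

    def __init__(self, max_size: int, max_age: float):
        self.max_size = max_size
        self.max_age = max_age
        self.closed: List[LogSegment] = []
        self.current: Optional[LogSegment] = None
        self.next_seqno = 0

    def append(self, change: bytes, now: float) -> None:
        # Close the current segment if this change would exceed the size limit,
        # or if the segment is already too old (checked only on append in this sketch).
        if self.current is not None and (
            self.current.size + len(change) > self.max_size
            or now - self.current.created >= self.max_age
        ):
            self.rotate()
        if self.current is None:
            self.current = LogSegment(self.next_seqno, now)
            self.next_seqno += 1
        self.current.changes.append(change)
        self.current.size += len(change)

    def rotate(self) -> None:
        if self.current is not None:
            self.closed.append(self.current)
            self.current = None

    def segments_after(self, seqno: int) -> List[LogSegment]:
        # The feeder keeps no per-replica state: each replica says where it stands.
        return [s for s in self.closed if s.seqno > seqno]


class Replica:
    """Hypothetical consumer: pulls segments and tracks its own position, no token involved."""

    def __init__(self) -> None:
        self.last_seqno = -1
        self.applied: List[bytes] = []

    def pull(self, feeder: Feeder) -> int:
        count = 0
        for seg in feeder.segments_after(self.last_seqno):
            self.applied.extend(seg.changes)  # apply changes directly to the local store
            self.last_seqno = seg.seqno
            count += 1
        return count
```

Because each replica remembers its own position, a node that was offline simply pulls the segments it missed at its own pace, instead of the feeder having to push and throttle.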

Capabilities everywhere

Warp 10 2.x had a few hardcoded secrets in its configuration, and there was no way to go beyond configured hard limits. Warp 10 3.x replaces AUTHENTICATE and all the secrets with more than 50 fine-grained capabilities. You can now easily create tokens that grant different rights to applications and users.

- Capabilities now have hardcoded names, and they are all documented.

- Some capability values are empty, some are regexp filters (for example, the URLs allowed for HTTP or REXEC), and some are numeric limits (for example, the maximum value of MAXOPS can be set by a capability).
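The three kinds of capability values can be illustrated with a small Python sketch. It assumes a token's capabilities can be modeled as a name-to-value mapping and that a numeric capability, when present, replaces the configured MAXOPS ceiling; the capability names below are hypothetical placeholders, not the real Warp 10 names, which are listed in the documentation.

```python
import re
from typing import Dict

# Hypothetical capability names, for illustration only (not the real Warp 10 names).
CAP_DEBUG = "example.debug"          # empty value: holding the capability is enough
CAP_HTTP_URLS = "example.http.urls"  # value is a regexp filter on allowed URLs
CAP_MAX_OPS = "example.maxops"       # value is a numeric limit

# A token's capabilities, modeled here as a simple name -> value mapping.
Capabilities = Dict[str, str]


def has_capability(caps: Capabilities, name: str) -> bool:
    # Empty-valued capabilities act as plain flags.
    return name in caps


def url_allowed(caps: Capabilities, url: str) -> bool:
    # Without the capability no URL is allowed; with it, the URL must fully match the regexp.
    pattern = caps.get(CAP_HTTP_URLS)
    return pattern is not None and re.fullmatch(pattern, url) is not None


def maxops_allowed(caps: Capabilities, requested: int, default_ceiling: int) -> bool:
    # Assumption: when present, the capability replaces the configured ceiling for MAXOPS.
    value = caps.get(CAP_MAX_OPS)
    ceiling = int(value) if value is not None else default_ceiling
    return requested <= ceiling
```

In this model, two tokens for the same application can carry different mappings, which is what makes per-user or per-application rights possible without a shared secret.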

Two new mechanisms can help you transition from 2.x:

- Default token attributes.

- Macro filter authentication plugin: each token can be changed on the fly.

Performance improvements

- Improved order-preserving base64 encoder.

- New BUCKETIZE implementation to improve performance. Custom bucketizers are the first beneficiaries; others will follow in future releases.

- Improved sorted merge.

- As the JDK improves, Warp 10 speeds up.
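An order-preserving base64 encoding guarantees that encoded strings sort in the same order as the raw bytes they encode, so encoded keys can be compared or sorted as strings. The minimal Python sketch below shows the idea; it assumes an alphabet of 64 characters listed in ascending ASCII order and no padding. This alphabet is an assumption and may not be the exact one Warp 10 uses.

```python
# Assumed alphabet: 64 URL-safe characters in ascending ASCII order,
# so comparing characters compares the 6-bit values they encode.
ALPHABET = "-0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ_abcdefghijklmnopqrstuvwxyz"
INDEX = {c: i for i, c in enumerate(ALPHABET)}


def encode(data: bytes) -> str:
    out = []
    # Process 3 bytes (24 bits) at a time, emitting 4 characters of 6 bits each.
    for i in range(0, len(data), 3):
        chunk = data[i:i + 3]
        n = int.from_bytes(chunk.ljust(3, b"\0"), "big")
        chars = [ALPHABET[(n >> shift) & 0x3F] for shift in (18, 12, 6, 0)]
        # No padding: keep only the characters that carry input bits.
        out.extend(chars[: len(chunk) + 1])
    return "".join(out)


def decode(text: str) -> bytes:
    out = bytearray()
    for i in range(0, len(text), 4):
        group = text[i:i + 4]
        n = 0
        # Missing trailing characters are treated as zero bits.
        for c in group.ljust(4, ALPHABET[0]):
            n = (n << 6) | INDEX[c]
        # A group of k characters carries k - 1 bytes.
        out.extend(n.to_bytes(3, "big")[: len(group) - 1])
    return bytes(out)
```

Standard base64 does not have this property because its alphabet starts with uppercase letters and puts digits and symbols after lowercase ones, so the character order does not follow the bit values.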

Extensions merged

- Debug extension (STDOUT…) is now part of WarpScript core.

- LevelDB extension (the one that allows fast purges to reclaim disk space) is now part of WarpScript core.

- WarpFleet extension (WF.ADDREPO…) is now part of WarpScript core.

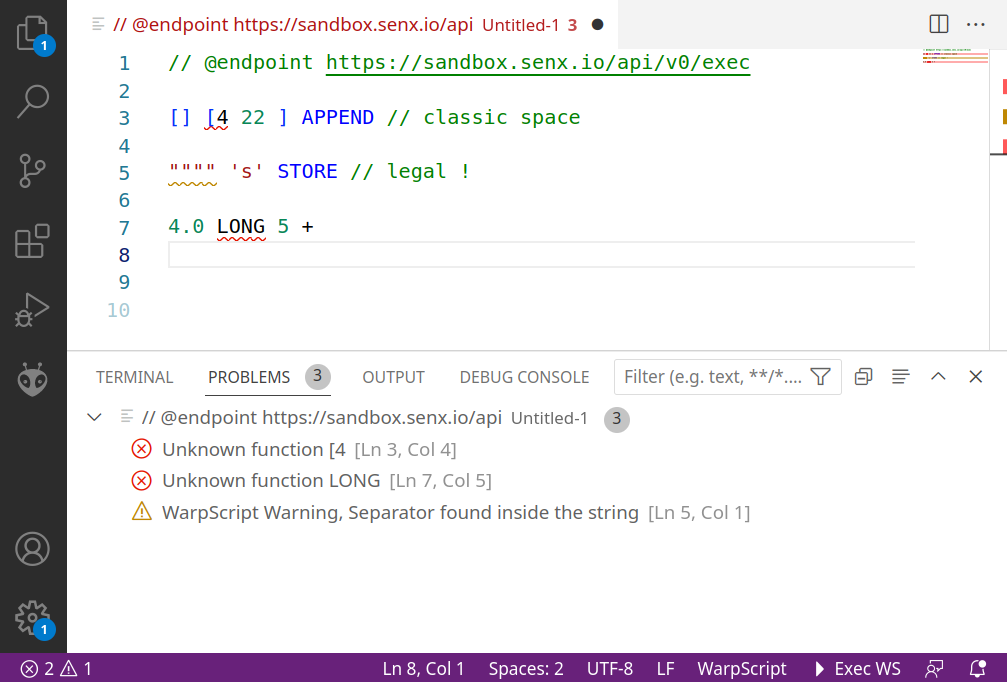

WarpScript parser rewrite

With the new WarpScript parser, you know exactly where in your source code an error occurred, with the line number and character position. It also supports a dry-run mode to detect syntax errors early. This is already implemented in the VSCode plugin and coming soon to WarpStudio.

Various WarpScript improvements

- Introduction of LSTORE and MSTORE, to easily store multiple macro input parameters in multiple variables.

- Introduction of all the PGP-related functions.

- Introduction of the SLEEP function.

- Introduction of XEVAL.

- Introduction of mapper.lat and mapper.lon.

- Added the keepempty option to FETCH, to combine FIND and FETCH in a single operation.

- The MAP list syntax can have fewer than 5 elements: [ $gts $mapper ] MAP is now legal.

- MUTEX and HTTP now have a timeout.

- Fix an edge case in ASREGS.

- Fix escaping in REOPTALT.

- Fix an issue in BUCKETIZE.CALENDAR.

- Fix IDENT to return the warp.ident configuration.

- Fix MACROCONFIGDEFAULT lookup.

- Fix mapper.npdf.

- Fix STOP behavior within TIMEBOX.

- Fix SHRINK when the GTS is already smaller than requested.

- Fix VALUESPLIT on boolean GTS.

- Fix an edge case in FETCH with pagination (gskip, gcount) on non-sorted data.

- WarpRun (a tool to run WarpScript outside Warp 10) can now take parameters.

echo "+" | java -classpath warp10/build/libs/warp10.jar -Dwarprun.format=json \
  io.warp10.WarpRun - hello world

Removed functions

- We removed the following functions from WarpScript: AUTHENTICATE, CHUNKENCODER, CUDF, DOC, DOCMODE, FROMBITS, HLOCATE, ISAUTHENTICATED, LEVELDBSECRET, MACROCONFIGSECRET, MAXURLFETCHCOUNT, MAXURLFETCHSIZE, STACKPSSECRET, TOBITS, TOKENSECRET, UDF, URLFETCH, WEBCALL, bucketizer.count.exclude-nulls, bucketizer.count.include-nulls, bucketizer.count.nonnull, bucketizer.join.forbid-nulls, bucketizer.max.forbid-nulls, bucketizer.mean.circular.exclude-nulls, bucketizer.mean.exclude-nulls, bucketizer.median.forbid-nulls, bucketizer.min.forbid-nulls, bucketizer.percentile.forbid-nulls, bucketizer.sd.forbid-nulls, bucketizer.sum.forbid-nulls, mapper.count.exclude-nulls, mapper.count.include-nulls, mapper.count.nonnull, mapper.join.forbid-nulls, mapper.max.forbid-nulls, mapper.mean.circular.exclude-nulls, mapper.mean.exclude-nulls, mapper.median.forbid-nulls, mapper.min.forbid-nulls, mapper.percentile.forbid-nulls, mapper.sd.forbid-nulls, mapper.sum.forbid-nulls, mapper.var.forbid-nulls.

- An audit tool is available to help you spot these changes and easily fix existing WarpScript code.

- A toolkit of special macros emulating the removed functions will be available soon (stay tuned, it is not public yet).

Going further

Start your migration now:

- Download version 3.0.0 here

- WarpScript audit tool for Warp 10 3.0

- How to migrate to Warp 10 3.0?

- Choose your Warp 10 3.0 flavor

Have questions about Warp 10? Join the Lounge to ask the community.

And if you need help with your migration, contact us to discuss.


Electronics engineer, fond of computer science, embedded solution developer.