Warp 10 is a very versatile platform that can handle any sequence of values. In this experiment, we show that it can even store videos and stream them in real time!

In a previous post, Pierre showed that it is possible to display a sequence of images on a small screen using Warp 10. Sequences of images fit the Geo Time Series model well because they can simply be defined as timestamped JPG images. However, this is not the most efficient way to represent a video, as the wealth of video codecs shows.

So I wondered whether it was possible to store an encoded video and stream it back in real time to a web player, all of that using Warp 10. Spoiler alert: it is!

Store

You can find the code for this part in this gist.

Prepare the Video

I chose the first episode of our WarpTV series, in 720p, for this experiment. I preferred an MP4 video with a non-exotic codec and a single audio track to keep things simple, but several audio tracks should also be possible.

For the video to be streamed, the MP4 file needs to be fragmented, which can be done with mp4fragment from the Bento4 toolkit. We will use other tools from this toolkit in this post, so if you intend to follow along, you should download its latest version.

The fragmented MP4 is still a single file, so you then need to split it into one file per segment using mp4split. Each of these segments must also be associated with its starting timestamp. You can run mp4info and mp4dump --verbosity 1 on the fragmented video to get the relevant information.
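Fragment start times are expressed in the track's own timescale, whereas Warp 10 uses microseconds by default. As a minimal sketch, assuming you have read each fragment's decode time (from its tfdt box in the mp4dump output) and the track timescale (from mp4info), the conversion is a single integer division. The function name and the example values are hypothetical.

```python
def decode_time_to_micros(decode_time: int, timescale: int) -> int:
    """Convert a decode time in track timescale units to microseconds.

    Integer arithmetic avoids floating-point error; the result is floored.
    """
    if timescale <= 0:
        raise ValueError("timescale must be positive")
    return decode_time * 1_000_000 // timescale


if __name__ == "__main__":
    # Hypothetical values: a fragment decoding at 9 086 400 ticks of a 90 kHz clock.
    print(decode_time_to_micros(9_086_400, 90_000))  # 100960000
```

Flooring is harmless here: as long as consecutive segments start more than a few microseconds apart, each one still gets a distinct timestamp.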


Upload to Warp 10

Now that we have audio and video segments associated with their timestamps, we want to upload them to a Warp 10 instance. The catch is that values are limited to 64 kB by default. This limit can be raised with the configuration key standalone.value.maxsize on a standalone instance, or ingress.value.maxsize on a distributed one.

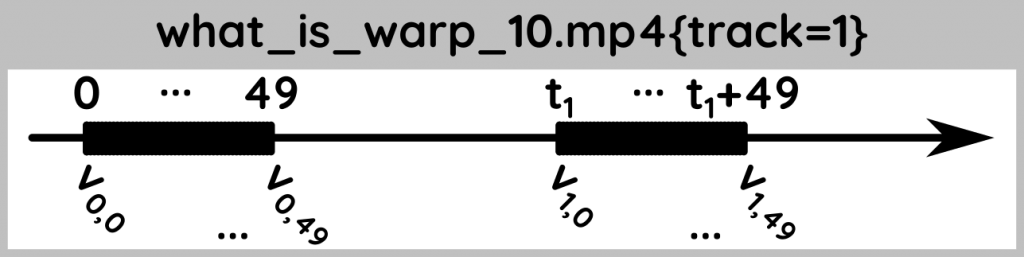

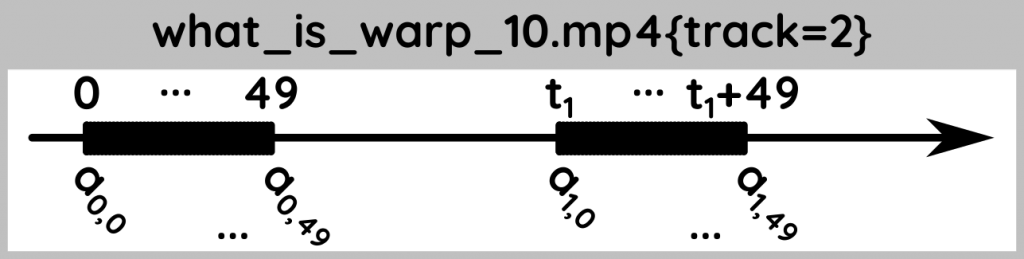

If you cannot or do not want to modify the configuration, a simple solution is to split each segment into N sub-segments and store them at timestamps ts, ts+1, …, ts+N-1, where ts is the starting timestamp of the segment. Since the default time unit in Warp 10 is the microsecond, it is very unlikely that a segment starts only a few microseconds after its predecessor. To keep things simple, N should stay constant for the whole video. I chose 50 sub-segments per segment for this experiment because every segment was far smaller than 50 × 64 kB = 3200 kB.
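The sub-segment trick can be sketched in a few lines of Python. This is an illustration, not the code from the gist: min_subsegments and split_segment are hypothetical names, and the 64 kB limit is assumed here to be 65 536 bytes. The boundaries are spread evenly, so there are always exactly N sub-segments whose sizes differ by at most one byte.

```python
import math

MAX_VALUE_SIZE = 64 * 1024  # assumed default value size limit (64 kB)


def min_subsegments(largest_segment_size: int, max_size: int = MAX_VALUE_SIZE) -> int:
    """Smallest constant N such that the largest segment fits in N values."""
    return max(1, math.ceil(largest_segment_size / max_size))


def split_segment(data: bytes, ts: int, n: int = 50, max_size: int = MAX_VALUE_SIZE):
    """Split one segment into exactly n (timestamp, bytes) pairs at ts, ts+1, ..., ts+n-1.

    Sub-segments are empty only if the segment is shorter than n bytes.
    Raises ValueError if any sub-segment would exceed max_size.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    bounds = [i * len(data) // n for i in range(n + 1)]
    parts = [(ts + i, data[bounds[i]:bounds[i + 1]]) for i in range(n)]
    largest = max(len(chunk) for _, chunk in parts)
    if largest > max_size:
        raise ValueError(f"sub-segment of {largest} bytes exceeds {max_size} bytes")
    return parts


if __name__ == "__main__":
    segment = bytes(range(256)) * 10  # 2560 bytes of dummy data
    parts = split_segment(segment, ts=100_960_000)
    print(parts[0][0], parts[-1][0], len(parts))  # 100960000 100960049 50
```

Joining the byte chunks in timestamp order gives back the original segment, which is exactly what the serving side relies on.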

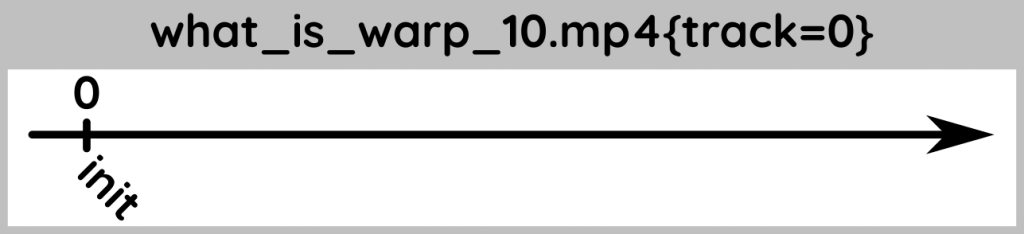

It's time to make GTSs and store them in Warp 10! We have an initialization segment, produced by mp4split. This is a special segment, so I decided to store it in a dedicated GTS. This segment is small, so it is not necessary to use the sub-segment trick.

Next come the video segments, which use the sub-segment trick. $t_{seg}$ is the starting timestamp of segment $seg$, with $t_0 = 0$, and $v_{seg,subseg}$ denotes the bytes of sub-segment $subseg$ of video segment $seg$, stored at timestamp $t_{seg} + subseg$.

Finally come the audio segments, which follow exactly the same model as the video ones: $a_{seg,subseg}$ denotes the bytes of sub-segment $subseg$ of audio segment $seg$.

Following these models, we can create a file in the GTS input format that uses the binary values introduced in Warp 10 2.1. You just have to encode each sub-segment file with base64 -w 0 and prefix the result with b64:. Here is an extract of the file I produced:

0// what_is_warp_10.mp4{track=0} b64:AAAAHGZ0eXBtcDQyAAAAAGl...

0// what_is_warp_10.mp4{track=1} b64:AAACRG1vb2YAAAAQbWZoZAA...

1// what_is_warp_10.mp4{track=1} b64:FDjMNsEaEJrqQpDak5Ju9E6...

2// what_is_warp_10.mp4{track=1} b64:dJlSISitljFI8VUIRBFzqdT......

49// what_is_warp_10.mp4{track=1} b64:FNAkJ/4JFwrtI6i/eNpvgl...

0// what_is_warp_10.mp4{track=2} b64:AAAB+G1vb2YAAAAQbWZoZAA...

1// what_is_warp_10.mp4{track=2} b64:AAAAAAAAAAA3pwAAAAAAAAA...

2// what_is_warp_10.mp4{track=2} b64:AAAAAAAAAAAAAAAAAAAAAAA......

49// what_is_warp_10.mp4{track=2} b64:Cgv6ElhxRwsi7uas7luWYE...

100960000// what_is_warp_10.mp4{track=1} b64:AAABBG1vb2YAAAA...

100960001// what_is_warp_10.mp4{track=1} b64:T2Ghi3fEKub+Z7D......

100960049// what_is_warp_10.mp4{track=1} b64:tcJKljrgnaU+uC/...

100960362// what_is_warp_10.mp4{track=2} b64:AAAA5G1vb2YAAAA......

100960411// what_is_warp_10.mp4{track=2} b64:fMIMj8oq/dd9qhH...

Now, simply upload that file with curl -T and the first step is done!
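Instead of calling base64 by hand for every sub-segment, the file can be generated with a short script. Here is a minimal Python sketch, assuming the (timestamp, track, bytes) tuples come from a splitting step like the one described earlier; gts_line and write_gts_file are hypothetical names. Each line has no latitude, longitude or elevation, hence the empty "//" after the timestamp, as in the extract above.

```python
import base64


def gts_line(ts: int, class_name: str, track: int, value: bytes) -> str:
    """Format one binary data point in the GTS input format (no location, no elevation)."""
    encoded = base64.b64encode(value).decode("ascii")  # same output as `base64 -w 0`
    return f"{ts}// {class_name}{{track={track}}} b64:{encoded}"


def write_gts_file(path: str, class_name: str, points) -> None:
    """Write (ts, track, bytes) tuples to a GTS input file, one data point per line."""
    with open(path, "w", encoding="ascii") as out:
        for ts, track, value in points:
            out.write(gts_line(ts, class_name, track, value) + "\n")


if __name__ == "__main__":
    # The first 12 bytes of an MP4 ftyp box with the mp42 brand.
    print(gts_line(0, "what_is_warp_10.mp4", 0, b"\x00\x00\x00\x1cftypmp42"))
    # 0// what_is_warp_10.mp4{track=0} b64:AAAAHGZ0eXBtcDQy
```

The printed value matches the beginning of the init segment line in the extract, since that segment starts with the same ftyp box.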

Serve

You can find the code for this part in this gist.

We will now use the HTTP plugin to serve the segments. A single API endpoint is enough: http://server:port/warpflix/data returns the init segment, and http://server:port/warpflix/data?start=s&end=e, where s and e are the start and end timestamps in microseconds, returns the concatenation of the video and audio segments in that range. The returned data may extend beyond e, because segments cannot be cropped without corrupting them.

Activate the HTTP plugin and add a single .mc2 file to the HTTP directory. This file only defines the API endpoint and a macro. The macro checks whether the start and end parameters are present and fetches the corresponding GTSs: if the parameters are missing, it gets {track=0} at timestamp 0; otherwise, it gets {track=~[12]} between s and e.

The last step is to concatenate all the values and convert them to bytes. Remember that binary values in GTSs are in fact ISO-8859-1 strings, so you have to convert them first with "ISO-8859-1" ->BYTES.
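To make the macro's behaviour concrete, here is a minimal Python sketch of the same logic; it is not the WarpScript from the gist. An in-memory dictionary stands in for the fetched GTSs, and serve_data and Store are hypothetical names. It assumes that every group of N consecutive points in a track forms one segment (true when N is constant), that a segment is returned whole when its first timestamp lies between s and e, and that track 1 is emitted before track 2; the order used by the original macro is not stated.

```python
from typing import Dict, List, Optional, Tuple

N = 50  # constant number of sub-segments per segment, as in this experiment

# Hypothetical stand-in for the stored GTSs:
# track -> list of (timestamp in microseconds, value as an ISO-8859-1 string).
Store = Dict[int, List[Tuple[int, str]]]


def serve_data(store: Store, start: Optional[int] = None,
               end: Optional[int] = None, n: int = N) -> bytes:
    """Mimic the warpflix/data endpoint: init segment, or whole segments in a range."""
    if start is None or end is None:
        # No range: the init segment stored on track 0 at timestamp 0.
        values = [v for t, v in store.get(0, []) if t == 0]
    else:
        values = []
        for track in (1, 2):  # the {track=~[12]} selector
            points = sorted(store.get(track, []))
            # Every n consecutive points form one segment. A segment is kept whole
            # when its first timestamp is in [start, end], so the output may extend
            # past `end` but no segment is ever cut.
            for i in range(0, len(points), n):
                segment = points[i:i + n]
                if start <= segment[0][0] <= end:
                    values.extend(v for _, v in segment)
    # ISO-8859-1 maps each character to exactly one byte, like "ISO-8859-1" ->BYTES.
    return b"".join(v.encode("iso-8859-1") for v in values)
```

The ISO-8859-1 round trip is lossless because every byte value from 0 to 255 maps to exactly one character, which is why binary values can safely travel as strings.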

Play

You can find the code for this part in this gist.

To play back the segments, we will use the MediaSource API, which is available in most browsers and gives us the flexibility we need.

Serving a static HTML page in WarpScript is easy. Writing the content of that page, however, is much trickier, especially if you want to be able to seek in the video, which I did.

The general idea is to create a MediaSource, attach it to the video element, and add two event listeners to that element:

- timeupdate, fired when the player updates the current playback time

- seeking, fired when the user changes the current playback time, for instance by clicking on the progress bar

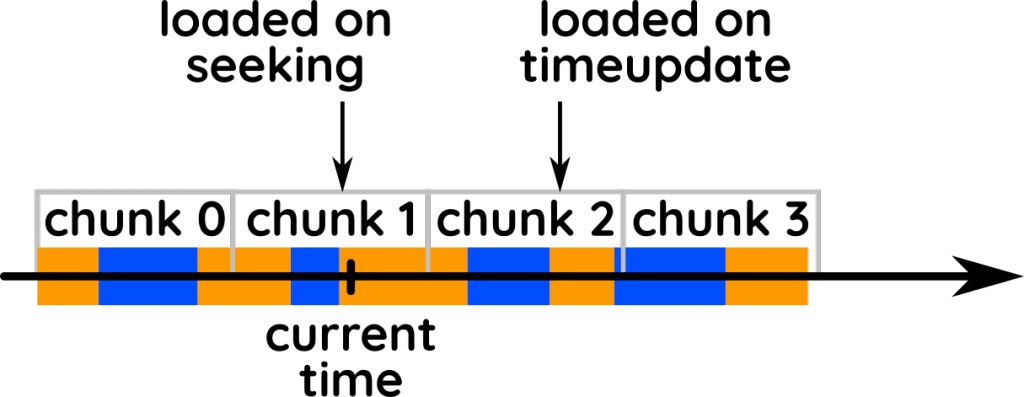

To simplify the code, I chose to load the segments in chunks of 2 seconds. The initialization loads the init segment and the first chunk. Then, whenever timeupdate fires, the next chunk is loaded if it has not been loaded already. When seeking fires, the chunk containing the new playback position is loaded if it has not been loaded already.
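The chunk bookkeeping can be modelled independently of the browser. The following is a minimal Python sketch, not the JavaScript from the gist: ChunkLoader and its methods are hypothetical names, and each method returns the URL the page would fetch before appending the bytes to a SourceBuffer, or None when the chunk was already requested. It assumes the endpoint returns the segments whose starting timestamp falls between start and end, so consecutive chunks use non-overlapping inclusive ranges.

```python
CHUNK_US = 2_000_000  # 2-second chunks, in microseconds


def chunk_index(current_time_s: float) -> int:
    """Index of the 2-second chunk containing a playback time given in seconds."""
    return int(round(current_time_s * 1_000_000)) // CHUNK_US


def chunk_range(index: int):
    """Inclusive (start, end) query parameters, in microseconds, for one chunk."""
    start = index * CHUNK_US
    return start, start + CHUNK_US - 1


class ChunkLoader:
    """Decide which chunk to request on initialization, timeupdate and seeking."""

    def __init__(self, base_url: str):
        self.base_url = base_url  # the data endpoint, e.g. .../warpflix/data
        self.loaded = set()       # indices of chunks already requested

    def _request(self, index: int):
        if index in self.loaded:
            return None  # already loaded: nothing to fetch
        self.loaded.add(index)
        start, end = chunk_range(index)
        return f"{self.base_url}?start={start}&end={end}"

    def on_init(self):
        # The init segment (no parameters), then the first chunk.
        return [self.base_url, self._request(0)]

    def on_timeupdate(self, current_time_s: float):
        # Prefetch the chunk after the one being played.
        return self._request(chunk_index(current_time_s) + 1)

    def on_seeking(self, current_time_s: float):
        # Load the chunk containing the new playback position.
        return self._request(chunk_index(current_time_s))
```

Tracking requested chunks in a set is what prevents timeupdate, which fires several times per second, from appending the same segments twice.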

Conclusion

You can test the final result on our demo page. This is a crude implementation with plenty of room for improvement, but it shows that streaming videos with Warp 10 is feasible.

Although storing videos in Warp 10 is of limited practical interest, this experiment covered several aspects of the platform, specifically how to:

- Model GTSs for rather exotic data

- Convert binary data to GTS input format

- Manipulate binary GTSs in WarpScript

- Create an HTTP API

- Serve a static HTML page

Share your videos with us on Twitter.
