Learn how to compute and fix clock drift between two data historians on the same product.

– We collect data from our system, in real-time!

We often hear this sentence…

Defining real time is not easy. The real time of an energy supplier is not the real time of a building landlord, nor that of weather data, nor that of thousands of low-energy devices.

The same sensor is more or less real-time depending on what it measures, not on its sampling frequency. Measuring the temperature of a 1000-liter tank is not the same as measuring the temperature of a semiconductor: their thermal inertia is very different!

Working on real-time data

Two years ago, I came across an interesting real-time problem. Imagine a device where real time is “around 5 seconds”: the physical phenomena your sensors measure can vary significantly within 5 seconds (wind is a good example).

In this device, two computers gathered data from different sensors.

The problems:

- One of the computers was running Windows, and NTP sync was buggy out of the box.

- One computer's clock was set to UTC (best practice for time series), and the other one to local time (Windows again).

- This situation went on for 2 years.

Data was nevertheless ingested this way. In this article, I explain how to fix this failure after the fact.

Fix the local vs UTC

This is the easy part: Warp 10 has all the functions you need:

- ->TSELEMENTS and TSELEMENTS-> both accept a timezone argument, so you can compute the offset between local time and UTC for the day you process (it can change with daylight saving time).

- TIMESHIFT applies a time offset to all the timestamps of a Geo Time Series.
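To make the idea concrete outside Warp 10, here is a minimal Python sketch of the same two steps. It finds the local-to-UTC offset for a given day, then shifts that day's timestamps by it. The sketch assumes that the local-time computer was in the Europe/Paris zone and that ticks are in microseconds (Warp 10's default time unit); both are hypothetical. The hard-coded EU daylight saving rule stands in for a real time zone database lookup, which is the role played by the timezone argument of the TSELEMENTS functions. The helper names are hypothetical.

```python
from datetime import date, timedelta

US_PER_S = 1_000_000  # assumption: ticks in microseconds (Warp 10 default unit)


def last_sunday(year: int, month: int) -> date:
    """Return the last Sunday of the given month."""
    first_of_next = date(year + (month == 12), month % 12 + 1, 1)
    last_day = first_of_next - timedelta(days=1)
    # weekday(): Monday=0 ... Sunday=6, so step back to the Sunday on or before it
    return last_day - timedelta(days=(last_day.weekday() - 6) % 7)


def paris_offset_s(day: date) -> int:
    """Local minus UTC, in seconds, at noon on `day` (hypothetical Europe/Paris).

    Hard-coded EU rule instead of a tz database: CEST (UTC+2) from the last
    Sunday of March to the last Sunday of October, CET (UTC+1) otherwise.
    """
    summer = last_sunday(day.year, 3) <= day < last_sunday(day.year, 10)
    return (2 if summer else 1) * 3600


def local_to_utc(ticks_us: list[int], day: date) -> list[int]:
    """Shift one day of local-time ticks back to UTC (the TIMESHIFT step)."""
    offset_us = paris_offset_s(day) * US_PER_S
    return [t - offset_us for t in ticks_us]
```

One offset per day is coarse. Samples recorded in the hours around the switch itself (01:00 UTC on those two Sundays) would need a per-sample offset.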

Here comes the dreadful clock drift

On the same product, the two computer clocks can drift apart by up to ±300 seconds, at a rate of about 1 s per day. The fix is the same, a TIMESHIFT, but what is the offset for the day you want to process?

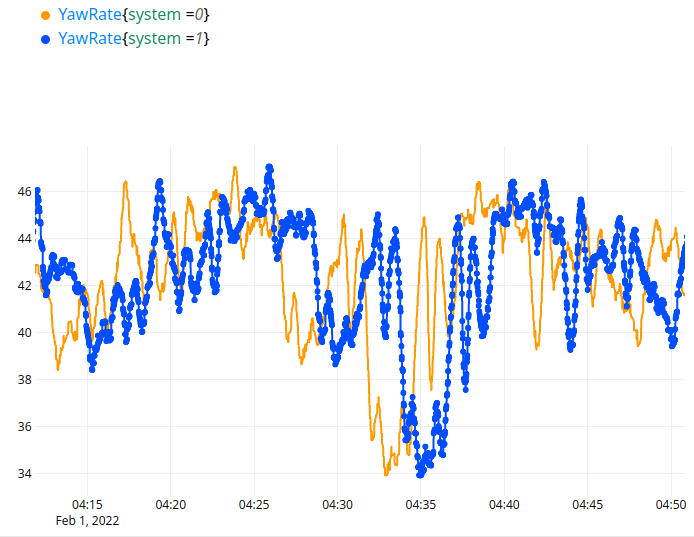
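A quick order-of-magnitude check shows why one offset per day is a reasonable granularity, and why a single offset for the whole history is not. It assumes the drift rate stays close to the 1 s per day quoted above:

$$
1\ \tfrac{\text{s}}{\text{day}} \times 1\ \text{day} = 1\ \text{s}
\qquad\qquad
\frac{300\ \text{s}}{1\ \text{s/day}} = 300\ \text{days}
$$

Within a day, the offset moves by about 1 s, the same as the 1-second bucket span used below for alignment. Over the 2 years this situation lasted, that rate is enough to reach the ±300 s bound.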

To get out of this situation, you have to find a quantity recorded by both computers, ideally one that varies continuously. In this system, I use the yaw rate:

The two records come from different sensors with different sampling rates, but they should be close enough to find a correlation between them. You can explore the curves with a macro hosted on our sandbox:

```warpscript
// @endpoint https://sandbox.senx.io/api/v0/exec
// list of the two yaw rate GTS, one per computer
@test/clockDriftEx 'inputs' STORE
```

Align data
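Conceptually, aligning two series means three things:

- putting both series on the same grid of fixed-width buckets,
- averaging the raw points that fall into each bucket,
- filling empty buckets from their neighbors.

The Python sketch below illustrates this; it is not Warp 10's implementation. Keying each bucket by its end tick and anchoring the grid on multiples of the span are choices made for the sketch (with a span of 10, the bucket keyed 10 covers ticks 1 to 10). With that grid, two series bucketized with the same span land on the same ticks.

```python
from collections import defaultdict


def bucketize_mean(points: list[tuple[int, float]], span: int) -> dict[int, float]:
    """Average (tick, value) points per bucket of width `span`.

    A bucket is keyed by its end tick, a multiple of `span`, and covers
    (end - span, end], so series bucketized with the same span share a grid.
    """
    sums: dict[int, float] = defaultdict(float)
    counts: dict[int, int] = defaultdict(int)
    for tick, value in points:
        end = -(-tick // span) * span  # round the tick up to a multiple of span
        sums[end] += value
        counts[end] += 1
    return {end: sums[end] / counts[end] for end in sorted(sums)}


def interpolate(buckets: dict[int, float], span: int) -> dict[int, float]:
    """Fill empty buckets between filled ones by linear interpolation."""
    ends = sorted(buckets)
    filled = dict(buckets)
    for left, right in zip(ends, ends[1:]):
        for tick in range(left + span, right, span):
            frac = (tick - left) / (right - left)
            filled[tick] = buckets[left] + frac * (buckets[right] - buckets[left])
    return dict(sorted(filled.items()))
```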

To use the correlation function, both series must be aligned on the same timestamps. As a well-trained Warp 10 user, you think of BUCKETIZE, and you are right:

```warpscript
// @endpoint https://sandbox.senx.io/api/v0/exec
@test/clockDriftEx 'inputs' STORE

1 s 'bucketSpan' STORE

// bucketize: one mean value per 1-second bucket
[ $inputs bucketizer.mean 0 $bucketSpan 0 ] BUCKETIZE 'inputsSynced' STORE

// linear interpolation to fill empty buckets
$inputsSynced INTERPOLATE SORT
'inputsSynced' STORE

$inputsSynced
```

Compute correlation

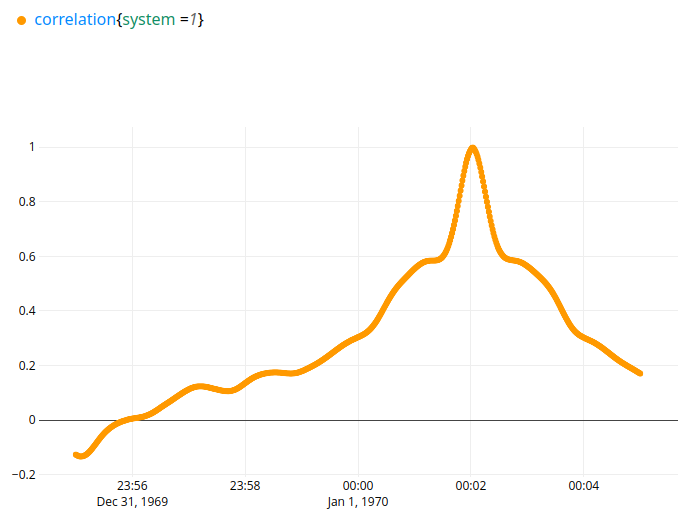

The CORRELATE function is magic: it tries every offset you give it and returns the result as a GTS. Add this code and look at the output:

```warpscript
// compute correlation
$inputsSynced 0 GET // base GTS
[ $inputsSynced 1 GET ] // list of the GTS to compare to
[ -300 300 <% $bucketSpan * %> FOR ] // search for a correlation with a -300 s to +300 s offset
CORRELATE 0 GET 'correlation' RENAME
'correlation' STORE

$correlation
```

The output is the correlation (a value in the [-1, 1] range, the higher the better) for each time offset you asked for (from -5 to +5 minutes).

On this day, the drift between the two systems was clearly around 2 minutes.
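What the correlation step computes can be sketched in a few lines of Python. For each candidate offset, the sketch compares the base series with the other series shifted by that offset, over the ticks they share, using the Pearson correlation coefficient. This is an illustration, not Warp 10's CORRELATE.

The sketch uses this convention: a peak at offset `off` means system 1 recorded an event `off` ticks after system 0, so the fix is to shift system 1 by `-off`. Whether CORRELATE uses the same sign convention is not something this sketch establishes. A series with zero variance gives NaN, which is one reason to pick a continuously varying signal such as the yaw rate.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient in [-1, 1]; NaN if a variance is zero."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return math.nan
    return cov / math.sqrt(vx * vy)


def correlate_at_offsets(base: dict[int, float], other: dict[int, float],
                         offsets) -> dict[int, float]:
    """Correlation between base[t] and other[t + off] for each offset `off`.

    Both series are {tick: value} dicts on the same bucket grid; only the
    ticks present in both, once shifted, take part in each computation.
    """
    result = {}
    for off in offsets:
        common = [t for t in base if t + off in other]
        if len(common) < 2:
            continue  # not enough overlap to correlate
        result[off] = pearson([base[t] for t in common],
                              [other[t + off] for t in common])
    return result
```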

Look for the best correlation

Now, look for the maximum value and its timestamp. If there is no obvious correlation (for example, because the drift is greater than 5 minutes, outside the searched range), it is better to fail. This takes a few more lines:

```warpscript
// correlation should have a maximum with a value close to 1
$correlation VALUES MAX 'maxCorrelation' STORE
// fail if not greater than 0.95
$maxCorrelation 0.95 > 'cannot find a correlation greater than 0.95, abort' ASSERTMSG
// keep the datapoint equal to the max
$correlation $maxCorrelation == 'maximumCorrelationGts' STORE
// fail if there is more than one maximum
$maximumCorrelationGts SIZE 1 == 'multiple correlation maxima found, cannot find the right correlation' ASSERTMSG
// the tick of the max correlation is the clock drift
$maximumCorrelationGts TICKLIST 0 GET 'clockDrift' STORE
// display as an ISO 8601 duration
$clockDrift ISODURATION
```

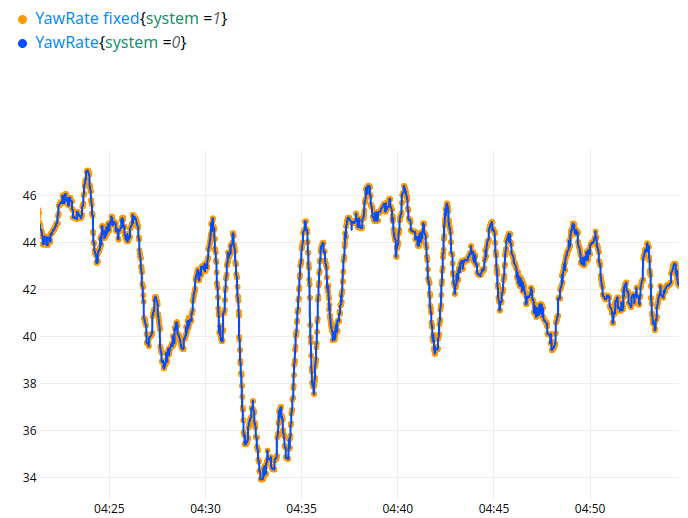

The result is 2 minutes, 2 seconds.
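The same safeguards look like this in a Python sketch: a correlation strictly greater than 0.95 and a single maximum. It works on a dictionary that maps each candidate offset to its correlation, and the function name is hypothetical.

```python
import math


def best_offset(corr: dict[int, float], threshold: float = 0.95) -> int:
    """Offset of the single highest correlation; raise if it is not trustworthy."""
    valid = {off: c for off, c in corr.items() if not math.isnan(c)}
    if not valid:
        raise ValueError("no correlation could be computed")
    best = max(valid.values())
    if not best > threshold:
        raise ValueError(f"cannot find a correlation greater than {threshold}, abort")
    maxima = [off for off, c in valid.items() if c == best]
    if len(maxima) != 1:
        raise ValueError("multiple correlation maxima found")
    return maxima[0]
```

Like the WarpScript version, it compares floating-point values for exact equality. Two maxima that differ only by rounding noise are therefore not reported as a tie.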

Final result

You can automate the drift computation to run every day and store the clock drift value in a GTS in your database.
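Here is a minimal Python sketch of that last step. It assumes microsecond ticks and a mapping from UTC day to drift in seconds, a hypothetical stand-in for the daily drift GTS. Each timestamp of system 1 is shifted by the opposite of the drift measured for its day, which is the -1 * drift TIMESHIFT below applied day by day. The helper names are hypothetical.

```python
from datetime import date, datetime, timezone

US_PER_S = 1_000_000  # assumption: ticks in microseconds (Warp 10 default unit)


def utc_day(tick_us: int) -> date:
    """UTC calendar day of a microsecond timestamp."""
    return datetime.fromtimestamp(tick_us // US_PER_S, tz=timezone.utc).date()


def fix_drift(ticks_us: list[int], drift_s_by_day: dict[date, int]) -> list[int]:
    """Shift each system 1 tick by minus the drift measured for its day.

    `drift_s_by_day` is a hypothetical daily log (UTC day -> drift in seconds),
    for example filled by a job that runs the correlation once a day.
    """
    return [t - drift_s_by_day[utc_day(t)] * US_PER_S for t in ticks_us]
```

The day is taken from system 1's own drifted timestamps. Only samples within the drift margin of midnight can pick up the neighboring day's value, a difference of about 1 s at roughly 1 s of drift per day.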

Once this is done, anyone in the company can call a macro to fix the offset: it is just a TIMESHIFT. To see the result, add the following to the code:

```warpscript
// display system 0
$inputs 0 GET
// shift the system 1 data by -1 * drift
$inputs 1 GET $clockDrift -1 * TIMESHIFT '+ fixed' RENAME
```

Here is the result:

Takeaway

Of course, you can also build a data historian with open-source software on Linux, see this article. Even so, a product can stay offline for a long time, so you cannot rely on NTP to keep all its subsystems in sync.

Design failures like these do happen, and they are hard to spot during the product development phase. Once your products are in the wild, there is no easy fix: you need to be able to cope with the data they send.

Collecting time series data is good, but to fix such problems, you may consider Warp 10. Contact us to discuss it.


Electronics engineer, fond of computer science, embedded solution developer.