Warp 10 can be connected to many external data sources. Learn how WarpScript can interact with QuestDB thanks to its JDBC extension.

QuestDB is a database that is primarily built for time series. You can query it using a variant of SQL, augmented for manipulating time-series. In a way, it is very similar to TimeScaleDB. On the opposite, Warp 10 takes a very different approach, using WarpScript to fetch and manipulate time series, which does not look like SQL at all.

So is it possible to get the best of the two worlds, using QuestDB for storage and fetching, and WarpScript for time series manipulation?

Good news, everyone! It is!

Here Comes JDBC

We can use JDBC to connect to QuestDB, that's one of the advantages of being SQL-compliant. On the Warp 10 side, will we need the JDBC WarpScript Extension to be able to connect to QuestDB through JDBC.

In the following, I will deploy QuestDB and Warp 10 using Docker for the sake of simplicity, and for you to be able to reproduce the results.

For the QuestDB part, simply run:

docker run -p 9000:9000 -p 8812:8812 questdb/questdbFor the Warp 10, it will be less easy because we need to set up the JDBC extension. First, we have to start the container with a volume, here on /tmp, to be able to add jars and change the configuration. We also need the container to have access to QuestDB on the host, we do that with --network="host" on Linux:

docker run --network="host" -d -v /tmp/warp10:/data --name=warp10 warp10io/warp10:2.7.0Then, we need to add the extension using the WarpFleet CLI. See this blog post to see how to install it. You can then run:

wf g -w /tmp/warp10/warp10 io.warp10 warp10-ext-jdbcQuestDB recommends using the official Postgres JDBC driver, so we add it to the jars loaded by Warp 10:

wget https://jdbc.postgresql.org/download/postgresql-42.2.14.jar -P /tmp/warp10/warp10/libAlmost done, we now have to configure Warp 10 to load the JDBC extension and use the Postgres driver:

echo "warpscript.extension.jdbc = io.warp10.script.ext.jdbc.JDBCWarpScriptExtension

jdbc.driver.postgres = org.postgresql.Driver" >> /tmp/warp10/warp10/etc/conf.d/70--extensions.confWe will also need to increase the limits for the last script, at the end of this post:

echo "warpscript.maxops = 100000

warpscript.maxops.hard = 2000000" >> /tmp/warp10/warp10/etc/conf.d/20-warpscript.confFinally, restart the warp10 container to load the extension:

docker restart warp10We now have two Docker containers, one running QuestDB and the other running Warp 10, ready to communicate with QuestDB.

Querying Data

Until now, our QuestDB is empty. I followed this tutorial to import the NYC Taxi data sample, in short download trips.csv and run:

curl -G http://localhost:9000/exec --data-urlencode \

"query=CREATE TABLE trips(pickupDatetime timestamp, \

dropoffDatetime timestamp, \

passengerCount int, \

tripDistance double, \

fareAmount double, \

tipAmount double, \

taxesAndTolls double, \

totalAmount double) \

timestamp(pickupDatetime);"

curl -i -F data=@trips.csv \

"http://localhost:9000/imp?name=trips&forceHeaders=true&overwrite=false×tamp=pickupDatetime"You can check if the data has been correctly imported with

curl -G http://localhost:9000/exec --data-urlencode \

"query=select pickupDatetime, avg(tripDistance) from trips timestamp(pickupDatetime) sample by 7d;"It should spit out the result with a count of 549.

Great! Now time to do the query in Warp 10. You can open WarpStudio or VSCode and paste the following code:

// QuestDB's endpoint

'jdbc:postgresql://localhost:8812/qdb'

// Default login/password

{ 'user' 'admin' 'password' 'quest' }

// SQL query

'SELECT pickupDatetime, avg(tripDistance) FROM trips timestamp(pickupDatetime) SAMPLE BY 7d;'

// No conversion to GTS for now

NULL

// Run the SQL query

SQLEXECYou should see the raw result of the query almost exactly what you got using the cURL. Note that while in the cURL result, timestamps are expressed as ISO8601, in WarpScript they are expressed in time units (by default microseconds) since Unix epoch.

Analyzing the Data

Warp 10 is communicating through JDBC with QuestDB, time to do some real WarpScript to analyze the data. Since service gratuity is unknown to us in France, tipping habits are seen as arcane. I wondered if the number of passengers has an impact on the tip and if this impact evolved during the last years.

WarpScript is not designed to work on tabular data but on times series, more precisely on Geo Time Series (GTS). So instead of getting data in a tabular way, we want to convert it to GTSs. Hopefully SQLEXEC can be given a List to generate GTSs.

// QuestDB's endpoint

'jdbc:postgresql://localhost:8812/qdb'

// Default login/password

{ 'user' 'admin' 'password' 'quest' }

// SQL query

'SELECT pickupDatetime, passengerCount, fareAmount, tipAmount FROM trips'

// Column to GTS conversion

// First element is the timestamp, it's pickupDatetime.

// Then we want GTSs with values from fareAmount and tipAmount.

// Notice passengerCount is not in this list, so it will be used as a label.

[ 'pickupDatetime' 'fareAmount' 'tipAmount' ]

SQLEXEC

// There is a point in the future in the data, get rid of it

NOW NOW TIMECLIPIf you execute this code in WarpStudio or VSCode, it may take a bit of time to display the result. Even if the execution of the code itself takes around half a second, displaying 14 times series totaling 367312 points makes JavaScript suffer.

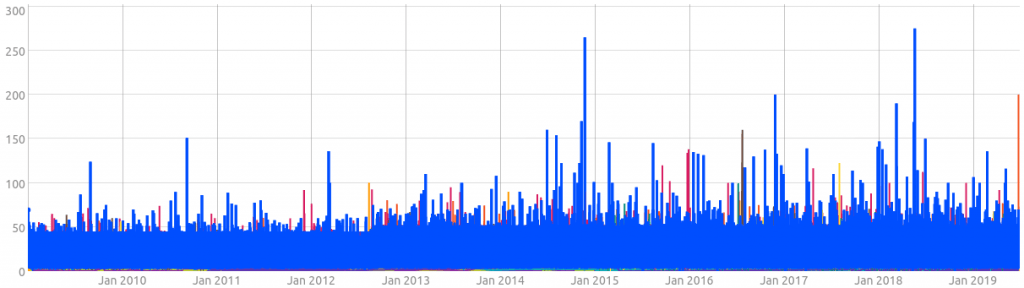

Anyway, here is what you should get:

What we want to do now it to compute tipAmount/fareAmount for each of the passenger counts. First, we have to separate tipAmount from fareAmount GTSs, using FILTER. Then we have to compute 1/fareAmount to be able to multiply it with tipAmount. We use that technique to use the REDUCE framework and its reducer.product.

In case fareAmount was 0, we make sure the values are finite with the MAP framework and its mapper.finite. Finally, to reduce the number of values, we group the values every quarter with the BUCKETIZE framework and its bucketizer.median.

Here is the complete script:

'jdbc:postgresql://localhost:8812/qdb'

{

'user' 'admin'

'password' 'quest'

}

'SELECT pickupDatetime, passengerCount, fareAmount, tipAmount FROM trips'

// Column to GTS conversion

// First element is the timestamp, it's pickupDatetime.

// Then we want GTSs with values from fareAmount and tipAmount.

// Notice passengerCount is not in this list, so it will be used as a label.

[ 'pickupDatetime' 'fareAmount' 'tipAmount' ]

SQLEXEC

// There is a point in the future in the data, get rid of it.

// Without getting into details, the flowing clips the data

// between Unix epoch and now.

NOW DUP TIMECLIP

'data' STORE

// Separate fareAmount from tipAmount

[ $data [] 'fareAmount' filter.byclass ] FILTER

[ SWAP -1 mapper.pow 0 0 0 ] MAP // Compute 1/fareAmount

'lFareAmount' STORE

[ $data [] 'tipAmount' filter.byclass ] FILTER

'lTipAmount' STORE

// Multiply tipAmount and 1/fareAmount to get the ratio.

[

$lTipAmount

$lFareAmount

[ 'passengerCount' ] // Separate passengerCount

reducer.product

] REDUCE

// Keep only finite values.

[ SWAP mapper.finite 0 0 0 ] MAP

// Aggregate on quarters.

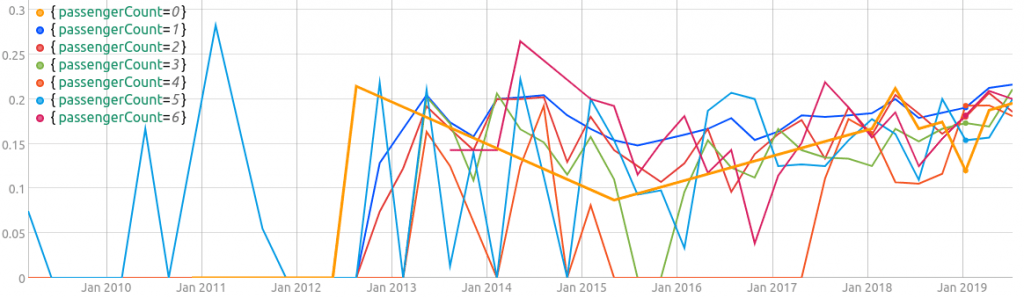

[ SWAP bucketizer.median 0 90 d 0 ] BUCKETIZEWhich produces this dataviz in VSCode:

What we can say from this result is that the quality of the data is poor until 2013. Between 2013 and 2017, the quality is better, but I still find it hard to believe there are so many stiffers (people who don't tip). Remember that this is a median on 3 months so a value of 0% means more than 50% of the people didn't tip during those 3 months.

Finally, 2018 and 2019 data seems to be good and also answer to my question: the number of passengers in a taxi does not impact the tip percentage. It also seems that there is a slow upward trend in the tip percentage, which was around 20% at the end of 2019.

Storing the Data

With the JDBC extension, it is possible to query data but also create a table and insert data into it. We will store our WarpScript results into QuestDB using the exact same SQLEXEC function.

There are several catches however. SQLEXEC supports batch insert using a PreparedStatement but when used on QuestDB, some data points were missing after the inserts. We can circumvent this by inserting point per point, each with a SQL statement. This raises a second issue: data points in QuestDB must be inserted in chronological order. This can be done by creating each row and then sorting them by date. Here's how this is done:

// Save the configuration for latter use.

<%

'jdbc:postgresql://localhost:8812/qdb'

{

'user' 'admin'

'password' 'quest'

}

%>

'conf' STORE

@conf

'SELECT pickupDatetime, passengerCount, fareAmount, tipAmount FROM trips'

[ 'pickupDatetime' 'fareAmount' 'tipAmount' ]

SQLEXEC

NOW

DUP TIMECLIP

'data' STORE

[ $data [] 'fareAmount' filter.byclass ] FILTER

[ SWAP -1 mapper.pow 0 0 0 ] MAP

'lFareAmount' STORE

[ $data [] 'tipAmount' filter.byclass ] FILTER

'lTipAmount' STORE

[ $lTipAmount $lFareAmount [ 'passengerCount' ] reducer.product ] REDUCE

[ SWAP mapper.finite 0 0 0 ] MAP

[ SWAP bucketizer.median 0 90 d 0 ] BUCKETIZE

// Store the result of our analysis.

'result' STORE

// Create the table

@conf

'CREATE TABLE tipratio(ts timestamp, tipRatio double, passengerCount long) timestamp(ts)'

NULL

SQLEXEC

DROP

// Create all the rows first.

[]

$result

<%

'gts' STORE

$gts LABELS 'passengerCount' GET TOLONG 'passengerCount' STORE

$gts

<%

'point' STORE

[

$point 0 GET ISO8601

$point 4 GET

$passengerCount

] +!

%>

FOREACH

%>

FOREACH

// Sort the rows per timestamp.

<% 0 GET %>

SORTBY

// Now, insert row by row.

<%

'row' STORE

@conf

[ $row ]

<'

INSERT INTO tipratio (ts, tipRatio, passengerCount) VALUES (to_timestamp(?, 'yyyy-MM-ddTHH:mm:ss.SSSUUUz'), ?, ?)

'>

NULL

SQLEXEC

%>

FOREACHThat's it! You can now query the data from WarpScript or directly from QuestDB with select * from tipratio.

Takeaways

With the help of the SQL extension, it is possible for WarpScript to interact with other SQL-compliant databases, including the time series database QuestDB. This includes querying, creating tables and inserting values.

The SQLEXEC function takes the URL, credentials, and SQL statement to execute. If it's a SELECT this function returns either the raw table or its conversion to GTSs.

SQL is not the other way of connecting external source of data, take a look at how Warp 10 can interact with Kafka, S3, and MQTT for instance.

Read more

Thrift and Protocol Buffers support in WarpScript

Send an Email with Warp 10 through Microsoft Graph

Warp 10 Office Hours #1 Recap

WarpScript™ Doctor