Managing historical time series data at scale can be challenging, especially when it comes to storage. Warp 10 HFiles is a cloud native approach which simplifies this task.

At SenX, time series are our DNA. Over the last 9 years, we have built what still stands today as the most advanced time series platform: Warp 10. Countless meetings with customers across industries have driven the design decisions which have shaped the Warp 10 suite of technologies.

For the last two years, we have worked on addressing one of the missing pieces of our ecosystem: a scalable and efficient cloud native solution for storing very large-scale historical data while retaining complete analytics capabilities on that data.

This technology is called HFiles. It will revolutionize the way you handle time series data, greatly simplifying infrastructures and drastically reducing storage and usage costs.

The need for HFiles

A very common pattern we encounter when talking with customers about the way they manage their data is the periodic downsampling of data. Raw data may be collected at a frequency of one data point per minute, but that raw data will only be retained for a week. After that, it will be aggregated hourly and the original raw data will be deleted. The same process repeats every quarter, downsampling hourly data into daily data and removing the hourly data.

The rationale given for this process is always the same: "We don’t need fine-grained data past an initial period." While this may be true for some applications, for the vast majority it is not. Discarding the original raw data means giving up opportunities for value creation.

But the real rationale is not what the "no need for fine-grained data" narrative seems to imply. The real rationale is that the infrastructure needed to handle the raw data at scale is simply unbearable, both technically and financially. Traditional time series databases will grow past the size that small teams can handle. They will require additional disks, then additional servers, and performance will inevitably start to degrade.

This is something we experienced first-hand with some of our customers who were pushing data at high frequency into a standalone instance of Warp 10. Past a few hundred billion data points, they were running into system administration issues. While they could solve those issues by migrating to the distributed version of Warp 10, they often did not want to go this route since it would require new skills.

This situation led us to design HFiles, short for History Files.

What are HFiles?

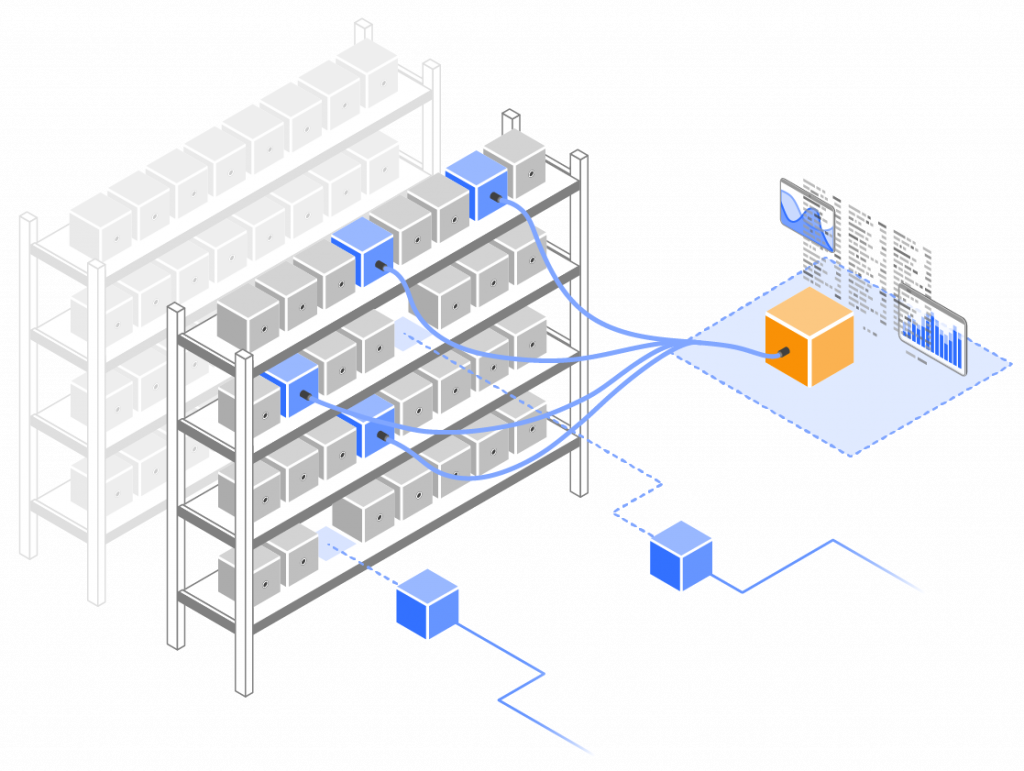

HFiles are high-density immutable files that can each contain billions of data points from millions of series. Those files can be stored on any medium, whether a local or network filesystem, distributed filesystems such as HDFS, Ceph, Lustre, or MooseFS, or object storage services like AWS S3, Azure Blob Storage, or Google Cloud Storage.

Those files can then be used in two ways:

- First, they can be mounted by a Warp 10 instance. This instance will instantly make the data in those files available for retrieval and analytics without requiring extra storage space.

- Second, the same HFiles can be manipulated using tools such as Spark or Flink.

We believe, and our customers now believe too, that HFiles fill a gap in existing data infrastructures. We have explained in a previous post why using general-purpose file formats such as Parquet is far from ideal for time series data. In contrast, HFiles were designed and integrated into the Warp 10 ecosystem to augment existing lakehouses. In this way, time series data can be used with high efficiency right next to the other types of data.

HFiles under the hood

HFiles store chunks of time series data that span a certain time range. The data for each time series is encoded in a compact way and the chunks are further compressed. The HFile also contains indexing information which is used to access only the required chunks.

These mechanisms put together lead to a very high storage density. The actual efficiency will, of course, depend on your data. But some of our customers have witnessed impressive results: a Machine Learning monitoring solution has stored over 300 billion data points (timestamp + value) from 6 million series in just over 90 GB.
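To put these figures in perspective, the arithmetic can be sketched as follows. The inputs are the rounded numbers quoted above. The 16-byte baseline (an 8-byte timestamp plus an 8-byte value, uncompressed) is an assumption made here for comparison only, not a figure reported by the customer.

```shell
#!/usr/bin/env bash
# Rounded figures quoted above: "just over 90 GB" for "over 300 billion" points.
size_bytes=90000000000      # 90 GB, taking 1 GB = 10^9 bytes
points=300000000000         # 300 billion data points

# Assumption for comparison only: an uncompressed data point takes 16 bytes
# (8-byte timestamp + 8-byte value).
naive_bytes_per_point=16

# awk performs the floating-point division that shell arithmetic lacks.
bytes_per_point=$(awk -v s="$size_bytes" -v p="$points" \
  'BEGIN { printf "%.2f", s / p }')
ratio=$(awk -v n="$naive_bytes_per_point" -v s="$size_bytes" -v p="$points" \
  'BEGIN { printf "%.0f", n * p / s }')

echo "bytes per data point: $bytes_per_point"
echo "reduction vs. naive encoding: about ${ratio}x"
```

Under these assumptions, each data point costs well under one byte on average.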

Each HFile (.hfile) comes with two companion files: a .info file containing various information about the HFile, and a .gts file. All three files are described below.

.hfile

The .hfile contains the Geo Time Series chunks, the associated indices, and summary information.

The structure of those files is designed to enable both sequential scanning and random access. It makes them suitable for batch and ad-hoc processing.

Those files can contain an arbitrarily large number of data points: hundreds of billions or even trillions, pertaining to millions of series. They can be organized by time range. For optimal performance, it is advised that the time ranges of files containing a given series do not overlap.
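The non-overlap advice comes down to a simple interval test. The sketch below is not part of the HFiles tooling: `ranges_overlap` is a hypothetical helper, and the two time ranges are made-up monthly boundaries expressed in microseconds since the Unix epoch.

```shell
#!/usr/bin/env bash
# ranges_overlap MIN1 MAX1 MIN2 MAX2
# Succeeds (exit status 0) when the closed time ranges [MIN1, MAX1] and
# [MIN2, MAX2] share at least one timestamp. Timestamps are plain integers.
ranges_overlap() {
  local min1=$1 max1=$2 min2=$3 max2=$4
  (( min1 <= max2 && min2 <= max1 ))
}

# Hypothetical files for one series: November 2020 and December 2020 (UTC).
if ranges_overlap 1604188800000000 1606780799999999 \
                  1606780800000000 1609459199999999; then
  echo "overlap"
else
  echo "no overlap"
fi
```

Splitting files on clean boundaries such as calendar months is one way to keep the ranges for a given series disjoint.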

.info

The .info file contains summary information about the .hfile, notably

- the time span covered by the file,

- the range of series it contains,

- the number of series and data points,

and some other details used by the HFile handling code to determine how various files should be used during queries.

The structure of the data in a .info file is a WarpScript snapshot of a list containing two elements, the name of a file and a map with the information above.

The content of this file can easily be edited manually. This can be useful, for example, if you want to modify the name of the file it relates to.

Lines from multiple .info files can be grouped in a single .info file, which can then be used to make multiple .hfile files available in a Warp 10 instance.
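Since a combined .info file is just the lines of the individual files put together, grouping them can be sketched with standard tools. `merge_info` is a hypothetical helper, not an HFiles utility, and the file names in the usage comment are placeholders.

```shell
#!/usr/bin/env bash
# merge_info OUTPUT INPUT...
# Writes every line of the INPUT .info files to OUTPUT, in argument order.
# "awk 1" prints each line followed by a newline, so an input that lacks a
# final newline cannot get glued to the first line of the next file.
merge_info() {
  local out=$1
  shift
  awk 1 "$@" > "$out"
}

# Hypothetical usage (file names are placeholders):
#   merge_info all.info 2023-01.info 2023-02.info
```

The resulting file can then be passed wherever a single .info file is expected.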

.gts

The .gts file contains the list of series in the HFile. This information is already contained in the .hfile, but it is made available in this additional file to reduce the amount of I/O that needs to be performed when opening the HFiles. This has a positive impact on your cloud costs since the content of the .hfile does not need to be read fully.

Each line of the .gts file is a series of WarpScript statements which output a GTSEncoder with the metadata of a series present in the file.

Custom .gts files can be created with only a subset of the series. Those custom files can later be used when making the data available in a Warp 10 instance to expose only a subset of the series in the HFiles.
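Because each line of a .gts file describes exactly one series, line-oriented tools are enough to count the series or to carve out a subset. The helpers below are hypothetical and select series by line position only. Filtering on the content of a line would require knowing exactly how the statements are written, which is not assumed here.

```shell
#!/usr/bin/env bash
# count_series FILE
# Prints the number of series listed in a .gts file (one series per line).
count_series() {
  wc -l < "$1"
}

# first_series N SOURCE TARGET
# Copies the first N lines (series) of SOURCE into a custom .gts file TARGET.
first_series() {
  head -n "$1" "$2" > "$3"
}

# Hypothetical usage (paths are placeholders):
#   count_series malizia.gts
#   first_series 10 malizia.gts subset.gts
```

The custom file should be written to a location where you have write access, since the original files may sit on read-only storage.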

Read more about Geo Time Series.

Practical HFiles

HFiles support relies on two components. The HFStore plugin allows reading data in HFiles, whether they are mounted in a Warp 10 instance or accessed from a batch job. This plugin is freely available and is now part of the official Warp 10 Docker image.

The production of HFiles is managed by the HFSProducer extension. This extension is available as part of our enterprise-level support subscriptions and is included in all instances of our cloud product. It is also available on a pay-as-you-go basis via the HFiles generation service.

HFStore plugin and extension

The HFStore plugin adds support for reading data from HFiles to a Warp 10 instance. The plugin manages a set of History Files Stores (HFStores) which are collections of HFiles.

Those HFStores can either be configured statically to be loaded at instance startup or can be dynamically added and removed.

The plugin loads an associated extension which exposes functions such as HFTOKEN and HFFETCH to read data from the HFStores.

The plugin also exposes the data in the HFStores via a dedicated /api/v0/fetch endpoint.

The HFStore plugin also contains code to read data in the HFiles from Spark jobs. This usage will be covered in a future article.

HFSProducer extension

The HFSProducer extension adds functions such as HFDUMP. It allows the generation of HFiles from data stored in a Warp 10 instance.

The HFSProducer add-on also contains code for generating HFiles from Spark jobs and a utility called hfadmin. This utility can be used to generate HFiles from raw or gts dumps of Warp 10 data and from InfluxDB line protocol data. The same utility can also merge and process HFiles, for example, to apply a RANGECOMPACT on their data or to filter out specific series or time ranges.

HFiles generation service

This service allows you to upload data in various formats (Warp 10 raw or gts format, InfluxDB line protocol) and retrieve the same data encoded as a HFile.

The service is billed based on the number of converted data points.

A future article will describe this service in detail. In the meantime, if you are interested in the service, please contact us directly.

Experiencing HFiles

To experience HFiles hands-on, we have created a few demo HFiles and made them available on a public object store. The following steps will guide you through the use of the latest Warp 10 Docker image to mount a test HFile and interact with it. We assume for the rest of this demo that you have access to a Linux machine.

Launching a container running the Warp 10 Docker image

Running a Warp 10 instance using the Docker image with an external volume is a prerequisite. It can be done simply by running the following commands:

mkdir /var/tmp/warp10.docker

docker run -p 8080:8080 -p 8081:8081 -p 4378:4378 --volume=/var/tmp/warp10.docker:/data warp10io/warp10

When run for the first time, this will create the directory hierarchy in the persistent volume. After the first execution, kill the docker container. You will need to start it again once the HFiles bucket is mounted.

Mounting the demo HFiles bucket

Before you can access the data in some HFiles, they must be visible to the Warp 10 instance.

We will use s3fs to mount the bucket containing the demo HFiles under a directory on the machine running the Warp 10 container.

# Create a file with the credentials to access the hfiles bucket

echo hfiles:57sy6gF3VDnJ6XF > /var/tmp/warp10.docker/hfiles.pass

chmod 400 /var/tmp/warp10.docker/hfiles.pass

# Mount the bucket under /var/tmp/warp10.docker/warp10/hfiles

s3fs -f /var/tmp/warp10.docker/warp10/hfiles -o host=https://hfiles.senx.io,bucket=hfiles,passwd_file=/var/tmp/warp10.docker/hfiles.pass,umask=0222,allow_other,use_path_request_style,nonempty

You should see content similar to the following in directory /var/tmp/warp10.docker/warp10/hfiles:

.
├── malizia
│   ├── malizia.gts
│   ├── malizia.hfile
│   └── malizia.info
└── rPlace
    ├── pixels.gts.gz
    ├── pixels.hfile
    └── pixels.info

Relaunch your Warp 10 instance

Rerun the following command:

docker run -p 8080:8080 -p 8081:8081 -p 4378:4378 --volume=/var/tmp/warp10.docker:/data warp10io/warp10

The id of the running container can be viewed using docker ps. It will be referred to as CONTAINER_ID in the rest of this post.

Opening a HFStore

Opening a HFiles Store means telling Warp 10 that a given directory contains some .hfile files that it should make available under a store name.

This operation is performed using the HFOPEN function. The Warp 10 Docker image also contains a handy utility called hfstore which wraps that call and generates, on the fly, a temporary token with the capability required to call it.

The hfstore call needs a store name, the path to the directory containing the HFiles, and the name of a .info file listing the .hfile files to add to the store.

Issue the following command:

docker exec CONTAINER_ID hfstore open malizia /data/warp10/hfiles/malizia malizia.info

This will create a HFStore called malizia which will expose the malizia.hfile HFile mentioned in malizia.info. This HFile contains data from the Team Malizia sailboat during the Vendée Globe Challenge. This is the very same data used in this previous article on our blog.

You can get a summary of what the newly created malizia HFStore contains by issuing the following command:

docker exec CONTAINER_ID hfstore info malizia

The output should look like this:

[
  {
    "infos":{
      "gts":419,
      "values":171418636,
      "mints":1604565256139000,
      "maxts":1611842365625000,
      "owners":["00000000-0000-0000-0000-000000000000"],
      "producers":["00000000-0000-0000-0000-000000000000"],
      "applications":["test"],
      "files":1,
      "fileorder":"MAXTS",
      "hfiles.size":721446050,
      "overlap":false
    },
    "files":["malizia.hfile"]
  }
]

This shows that the HFStore malizia contains one file with 171,418,636 data points from 419 series.
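The other fields of the summary can be decoded with a few lines of shell. This is a minimal sketch: it assumes that mints and maxts are expressed in microseconds since the Unix epoch, which the magnitude of the values suggests but the output itself does not state, and it relies on the GNU version of date found on Linux.

```shell
#!/usr/bin/env bash
# Values copied from the hfstore info output above.
mints=1604565256139000
maxts=1611842365625000
values=171418636
gts=419

# Assumption: timestamps are microseconds since the Unix epoch.
# Integer division drops the sub-second part.
# "date -d @SECONDS" is GNU date syntax.
first=$(date -u -d "@$(( mints / 1000000 ))" +%Y-%m-%dT%H:%M:%SZ)
last=$(date -u -d "@$(( maxts / 1000000 ))" +%Y-%m-%dT%H:%M:%SZ)

# Whole days between the first and last timestamps.
days=$(( (maxts - mints) / 1000000 / 86400 ))

# Average number of data points per series, rounded down.
avg=$(( values / gts ))

echo "first data point: $first"
echo "last data point:  $last"
echo "time span: $days full days"
echo "average data points per series: $avg"
```

Under that assumption, the file covers 84 full days, from 5 November 2020 to 28 January 2021.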

Registering the Geo Time Series

After the hfstore open call, the HFStore is known to the Warp 10 instance. But its data cannot yet be queried because the series contained in the HFiles are not yet registered in that instance.

We need to register the series we want to access by instructing the HFStore plugin to load them in the Warp 10 Directory.

This is done using the HFINDEX function. In the case of the Warp 10 Docker instance, the following call will register all series listed in malizia.gts:

docker exec CONTAINER_ID hfstore register malizia /data/warp10/hfiles/malizia/malizia.gts

Generating a token

The last step before the data can be used is to generate a token suitable for reading the data.

The HFStore function to use for this purpose is HFTOKEN, or, in the case of a Warp 10 Docker instance:

docker exec CONTAINER_ID hfstore token malizia '1 d'

which will generate a token valid for one day (1 d). The token will be output and will be referred to as TOKEN in the rest of this article.

Accessing data

The data from the malizia HFile Store can now be accessed. There are multiple ways this can be done.

First, the Warp 10 Docker image exposes two endpoints on port 4378, /api/v0/find and /api/v0/fetch, which are connected to the Directory and HFile Store.

The following commands will list the GTS known to the directory and fetch 1000 data points of the GTS com.team-malizia.NRJ_Solar_cal.ISolar.

TOKEN=TOKEN

# List GTS

curl -g "http://127.0.0.1:4378/api/v0/find?token=${TOKEN}&selector=~.*{}"

# Read GTS com.team-malizia.NRJ_Solar_cal.ISolar

curl -g "http://127.0.0.1:4378/api/v0/fetch?token=${TOKEN}&selector=com.team-malizia.NRJ_Solar_cal.ISolar{}&now=now&count=1000"

The second way data can be accessed is by using the HFFETCH function in WarpScript code submitted to the normal /api/v0/exec endpoint of your instance.

Unregistering Geo Time Series

The registration of GTS in the directory will persist across restarts of your Warp 10 instance. But at some point in the future, you might want to remove the registered series from the directory, for example, because you no longer mount the HFiles that they point to.

This process is easily done using hfstore purge which will generate a series of curl commands with tokens suitable for deleting registered series. There may be multiple such commands since the series in the HFiles may have very diverse owners, producers, or applications. It is therefore necessary to generate multiple tokens to cover them all.

The generated curl commands assume that the deletion of metadata only is enabled in the configuration via the ingress.delete.metaonly.support key set to true.
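Setting that key can be sketched as below. The key name and value come from the paragraph above. Everything else is an assumption: `enable_metaonly_delete` is a hypothetical helper, the `key = value` line follows the usual Warp 10 configuration file syntax, and which configuration file your instance actually reads depends on your setup.

```shell
#!/usr/bin/env bash
# enable_metaonly_delete CONF_FILE
# Appends the key to CONF_FILE unless a line for it is already present.
# An existing line is left untouched, whatever its value, so check it manually.
enable_metaonly_delete() {
  local conf=$1
  local key='ingress.delete.metaonly.support'
  if ! grep -q "^[[:space:]]*${key}[[:space:]]*=" "$conf" 2>/dev/null; then
    printf '%s = true\n' "$key" >> "$conf"
  fi
}

# Hypothetical usage (the path is a placeholder for your configuration file):
#   enable_metaonly_delete /path/to/warp10/conf/file.conf
```

The instance most likely needs to be restarted for a configuration change of this kind to take effect.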

Takeaways

We have presented HFiles, which open an ocean of possibilities for time series data storage in many industries. They enable raw data to be retained at a very low cost while keeping it fully available for analysis. This innovation reinforces Warp 10's status as the most advanced time series platform.

We are eager to discuss with you how you could benefit from HFiles. Please reach out to us so we can schedule a short call to discover your challenges and identify how HFiles or other Warp 10 technologies could help you address them.

